Introduction

On May 26, 2025, zkSecurity was engaged to perform a security audit of Phala Network’s dstack project. The audit focused on parts of two public codebases: dstack at commit be9d0476a63e937eda4c13659547a25088393394, and meta-dstack at commit 5b63aec337f19a541798970c7cf8d846171f0ca9. The audit was conducted by two consultants between May 26 and June 13, 2025.

Phala Network was responsive and helped us investigate a number of leads, and they promptly addressed the findings in meta-dstack v0.5.3 at commit 1dbed7dd6eede0a99a0ae895f7bf99146d134387.

Scope

The scope for the project was twofold:

Low-level libraries and tooling. The first part of the scope involved reviewing low-level libraries and tooling, including the following components from https://github.com/Dstack-TEE/dstack:

- ra-tls and ra-rpc, implementing an augmented TLS service (which relies on an external x509 cert library which was deemed out of scope).

- guest-agent, a service that runs in a confidential VM (CVM) to serve a container’s key derivation and attestation requests.

- dstack-util, a CLI with subcommands like full-disk encryption (FDE).

Image-related files. The second part of the scope focused on image-related files for the dstack OS, such as Yocto BitBake recipes and base initialization scripts. This includes the Yocto BitBake files (rootfs, initramfs, initramfs-files/init) from https://github.com/Dstack-TEE/meta-dstack/tree/main/meta-dstack/recipes-core/images and the basefiles from https://github.com/Dstack-TEE/dstack.

Methodologies

We approached the project in two phases. In the first phase we focused on:

- Understanding the intended attacker model and trust boundaries based on the documentation provided.

- Understanding and reviewing RA-TLS, an SGX-inspired protocol that integrates Intel SGX Remote Attestation with TLS (Integrating Intel SGX Remote Attestation with Transport Layer Security).

- Understanding internal and external CVM interfaces and the access control in place for them.

- Evaluating privilege escalation strategies and potential vulnerabilities and their impact on the intended countermeasures.

In the second phase, we focused on the dstack OS images, their configuration, and the QEMU launch of the CVM:

- Reviewing the reproducibility of builds.

- Checking if measurements reflect necessary events at the right time.

- Reviewing the differences between dev and prod images, and understanding hardening of the production image.

- Assessing the role of dm-verity in the booting process.

- Focusing on the host operator attacker model and the potential vulnerabilities operators can exploit.

Strategic Recommendations

The following recommendations are intended to strengthen the overall security posture of the system.

Documentation. Given the complexity of the system and the challenges of producing secure, minimal images, we recommend documenting both the rationale and the decisions behind the hardening of the BitBake recipes. See VMM Is Currently Trusted In OVMF Build, qemu-guest-agent Is Present In Production, and, more generally, Lack Of Documentation On Design and Hardening Decisions In meta-dstack Layer.

Further Audits. While this audit served as a solid entry point, several related areas remain unreviewed. We recommend conducting additional audits on the following components:

- dstack-kms and related flows. As Pre-Launcher Code Can Be Used To Leak Secrets On Default KMS pointed out, some KMS flows and interactions within the scope of this audit were overlooked.

- dcap-qvl. Phala Network rewrote Intel’s reference C++ quote verification library in Rust; this rewrite was outside the scope of this review. A dedicated audit would help ensure its correctness. See Incomplete TD Under Debug Checks, Underdocumented Root of Trust and Vendored Attestation Code, and Lack Of Revocation Checks In Quote Verification Library.

Overview

Architecture Overview

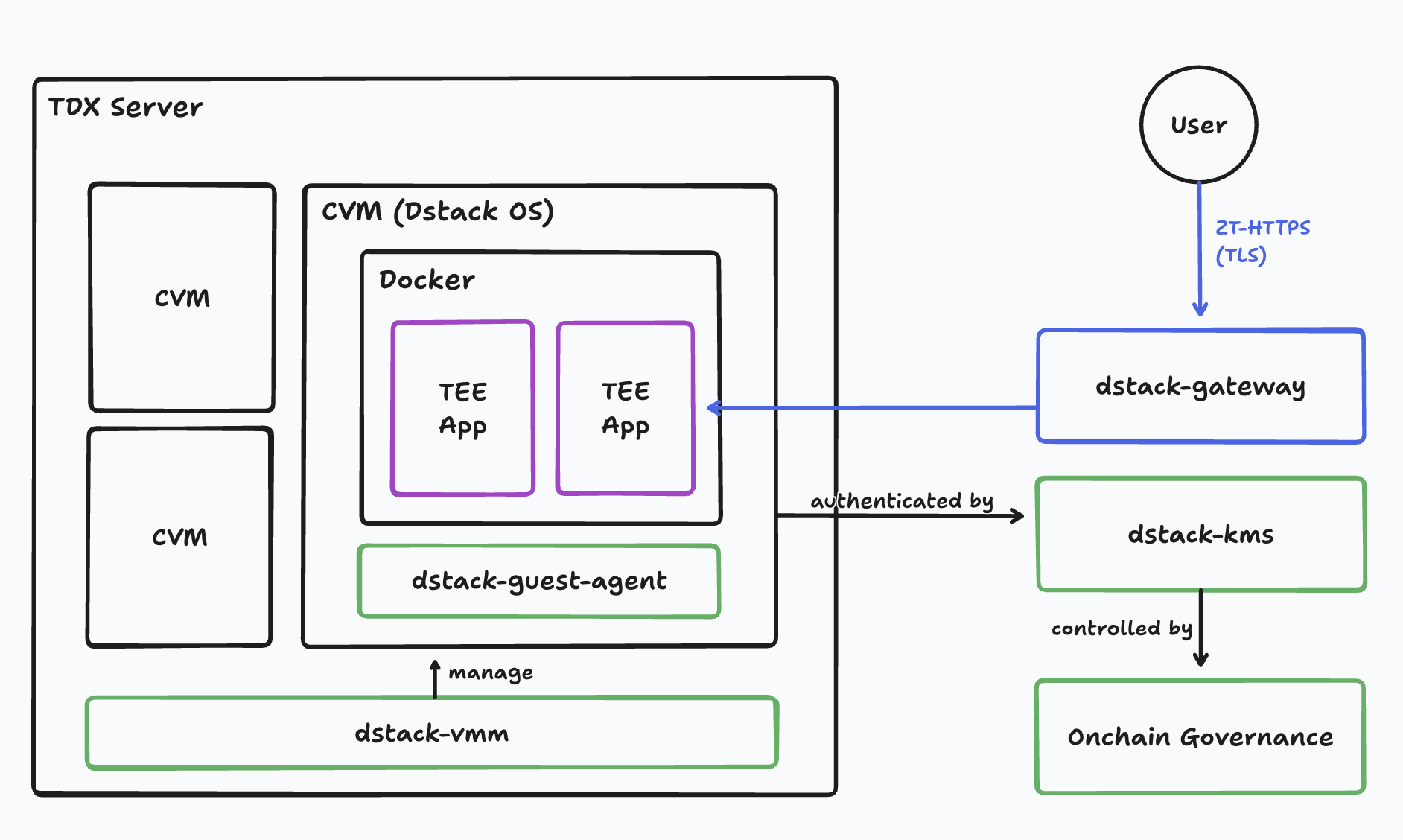

The architecture overview of Dstack is depicted in the following image from their official repository:

CVM (dstack-os). The Confidential Virtual Machine (CVM) serves as the primary virtualized environment within the Intel TDX (Trust Domain Extensions) framework. It runs the guest operating system known as dstack-os, which is also referred to as meta-dstack. meta-dstack is built as a Yocto Project meta layer designed to produce a reproducible guest image.

Within the CVM, the guest image includes a Docker runtime that is responsible for launching and managing the containerized workloads. These containers host the main application logic, making the CVM the execution environment for end-user services.

dstack-vmm. The dstack-vmm is a service operating on the bare-metal TDX host, responsible for managing the full lifecycle of the CVM. Acting as the Virtual Machine Manager (VMM), it coordinates the creation, configuration, execution, suspension, and termination of CVMs.

dstack-guest-agent. The dstack-guest-agent is an in-guest service that runs within the CVM. It is primarily responsible for handling sensitive operations such as container-specific key derivation and servicing remote attestation requests.

dstack-gateway. The dstack-gateway functions as a reverse proxy that mediates encrypted communications (over TLS) between the CVM and external, public-facing networks.

dstack-kms. The dstack-kms component operates as a Key Management Service (KMS) designed to generate, store, and manage cryptographic keys for CVMs.

Intel TDX Runtime Measurements

Intel TDX (Trust Domain Extensions) provides four runtime measurement registers: RTMR0, RTMR1, RTMR2, and RTMR3. These registers function similarly to TPM PCRs: they are append-only (i.e. they can be extended but not reset) and are designed to reflect the integrity of the runtime environment.

At runtime, both the firmware and guest software can extend these RTMRs: the new register value is the hash of the current value concatenated with a digest of the new data (for TDX, RTMR_new = SHA384(RTMR_old || digest)). This process, called measuring, ensures that any change in the system’s state, or in the order of measured events, results in different measurement values.
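To make the append-only chaining concrete, here is a minimal Rust sketch of a register extend operation. It assumes the TDX extend rule, new = SHA384(old || digest), but to stay dependency-free it substitutes a toy, non-cryptographic function (the hypothetical `toy_digest48`) for SHA-384. It illustrates chaining and ordering only and must not be used for real measurements.

```rust
/// Size of a TDX runtime measurement register in bytes (a SHA-384 digest).
pub const RTMR_LEN: usize = 48;

/// Toy, NON-cryptographic 48-byte digest built from six seeded FNV-1a lanes.
/// It only stands in for SHA-384 so the sketch needs no external crates.
pub fn toy_digest48(data: &[u8]) -> [u8; RTMR_LEN] {
    let mut out = [0u8; RTMR_LEN];
    for (lane, chunk) in out.chunks_mut(8).enumerate() {
        // A different offset per lane so the six 8-byte lanes differ.
        let mut h: u64 = 0xcbf2_9ce4_8422_2325 ^ lane as u64;
        for &b in data {
            h ^= u64::from(b);
            h = h.wrapping_mul(0x0000_0100_0000_01b3);
        }
        chunk.copy_from_slice(&h.to_le_bytes());
    }
    out
}

/// An append-only register: the only mutation available is `extend`.
pub struct Rtmr {
    value: [u8; RTMR_LEN],
}

impl Rtmr {
    /// Registers start as all zeros.
    pub fn new() -> Self {
        Rtmr { value: [0u8; RTMR_LEN] }
    }

    /// new = H(old || digest); there is no way to reset or overwrite.
    pub fn extend(&mut self, digest: &[u8; RTMR_LEN]) {
        let mut buf = Vec::with_capacity(2 * RTMR_LEN);
        buf.extend_from_slice(&self.value);
        buf.extend_from_slice(digest);
        self.value = toy_digest48(&buf);
    }

    /// Current register value, as it would appear in a quote.
    pub fn value(&self) -> [u8; RTMR_LEN] {
        self.value
    }
}
```

Because each value depends on the whole history, extending the same two digests in the opposite order yields a different register value, which is what lets a verifier detect reordered or missing events.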

The first three registers, RTMR0–RTMR2, are used for predefined components in the boot process. RTMR3 is available for applications to use at runtime (e.g., to log and prove that specific data or events occurred). In the dstack OS, RTMR3 records information about the CVM, such as the compose-hash (a hash of app-compose.json, which describes the container stack). This is done before the keys for persistent data and TLS communication are generated or received from the KMS. Roughly, RTMR0–RTMR2 guarantee that the expected dstack OS is running, while RTMR3 captures measurements related to the Docker application stack running on top of that OS.

The expected values of the registers can be computed on a machine without TDX support using the dstack-mr tool, a client-side reimplementation of the measurement logic. It can replay the measurement process and help verify expected hashes.

In addition to these registers, the MRTD (the TD’s build-time measurement register) is initialized by the TDX module itself and contains measurements of the early firmware loaded by the hypervisor (e.g., OVMF). It is immutable after launch and is critical for the root of trust.

The QEMU command below shows the components involved in booting a TDX guest:

/usr/bin/qemu-system-x86_64 \
    ...
    -bios ovmf.fd \
    -kernel bzImage \
    -initrd initramfs.cpio.gz \
    -drive file=rootfs.img.verity,format=raw,readonly=on \
    ...
    -append "console=ttyS0 ... dstack.rootfs_hash=... dstack.rootfs_size=..."

Here we can see how each parameter contributes to computing the registers:

- OVMF (-bios): measured by the firmware and contributes to RTMR0.

- Kernel and initramfs (-kernel, -initrd): measured into RTMR1.

- Kernel command line (-append): includes critical fields such as the hash of the root filesystem, and is measured into RTMR2.

- Application events: applications can call into the guest agent (via RA RPC) to emit custom events that extend RTMR3.

The core application events recorded by dstack-util/src/system_setup.rs are:

extend_rtmr3("system-preparing", &[])?;
extend_rtmr3("app-id", &instance_info.app_id)?;
extend_rtmr3("compose-hash", &compose_hash)?;
extend_rtmr3("instance-id", &instance_id)?;
extend_rtmr3("boot-mr-done", &[])?;

These events mark distinct phases of runtime initialization and are recorded before any keys are requested or generated.
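A verifier can recompute RTMR3 by replaying these events in order. The Rust sketch below does so for the boot-time sequence listed above. The event encoding (`name || ":" || payload`) and the toy hash standing in for SHA-384 are assumptions for illustration; they are not dstack’s actual byte layout.

```rust
/// Register width; real RTMRs hold SHA-384 digests.
const LEN: usize = 48;

/// Toy NON-cryptographic hash standing in for SHA-384 (assumption).
fn toy_hash(data: &[u8]) -> [u8; LEN] {
    let mut out = [0u8; LEN];
    for (lane, chunk) in out.chunks_mut(8).enumerate() {
        let mut h: u64 = 0xcbf2_9ce4_8422_2325 ^ lane as u64;
        for &b in data {
            h ^= u64::from(b);
            h = h.wrapping_mul(0x0000_0100_0000_01b3);
        }
        chunk.copy_from_slice(&h.to_le_bytes());
    }
    out
}

/// Hypothetical event digest: H(name || ":" || payload).
fn event_digest(name: &str, payload: &[u8]) -> [u8; LEN] {
    let mut buf = name.as_bytes().to_vec();
    buf.push(b':');
    buf.extend_from_slice(payload);
    toy_hash(&buf)
}

/// Replays an event log from an all-zero register: rtmr = H(rtmr || event_digest).
pub fn replay_rtmr3(events: &[(&str, Vec<u8>)]) -> [u8; LEN] {
    let mut rtmr = [0u8; LEN];
    for (name, payload) in events {
        let mut buf = rtmr.to_vec();
        buf.extend_from_slice(&event_digest(name, payload));
        rtmr = toy_hash(&buf);
    }
    rtmr
}

/// The boot-time sequence emitted by system setup, in order.
pub fn boot_events(app_id: &[u8], compose_hash: &[u8], instance_id: &[u8]) -> Vec<(&'static str, Vec<u8>)> {
    vec![
        ("system-preparing", Vec::new()),
        ("app-id", app_id.to_vec()),
        ("compose-hash", compose_hash.to_vec()),
        ("instance-id", instance_id.to_vec()),
        ("boot-mr-done", Vec::new()),
    ]
}
```

Changing any payload, or emitting the same events in a different order, changes the final value, which is why a remote party can tie the quoted RTMR3 to a specific app-id, compose-hash, and instance-id.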

Together, this strategy aims to ensure that:

- Only a trusted kernel with trusted OVMF is used.

- The kernel parameters are not modified by a malicious host.

- Only a trusted rootfs image is mounted.

- Runtime integrity can be remotely verified.

- RA-TLS certificates can bind to an attested software state (see the RA-TLS section for more details).

In sum, proper measurement is the foundation of trust in TDX-based confidential computing environments.

RA-TLS

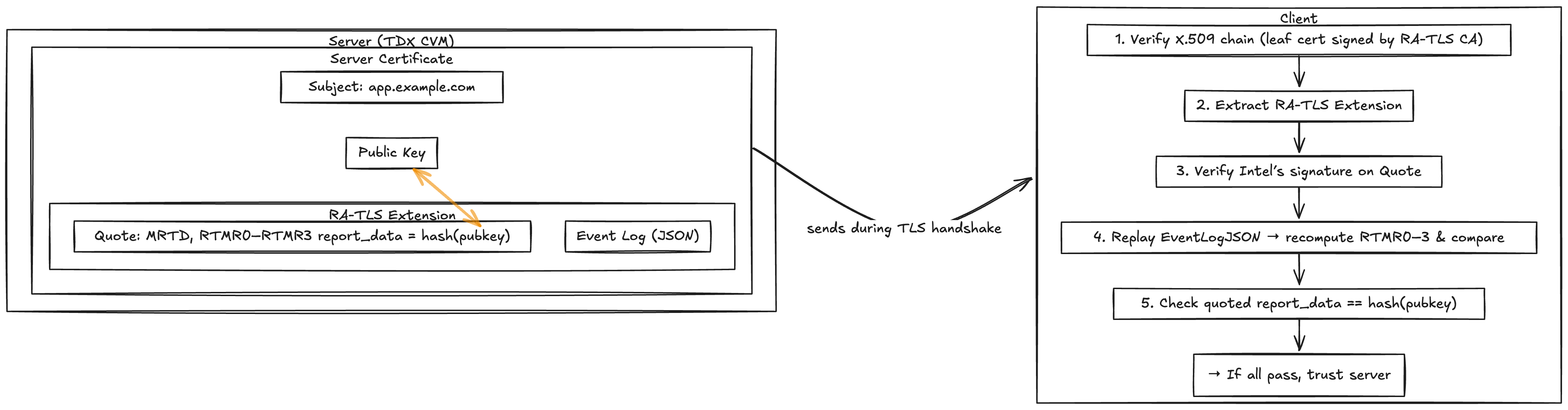

A key feature of the dstack framework is the ability of an application running inside a CVM to prove it is running a trusted dstack OS image and a particular Docker application. In order to do this using the TDX platform, it is crucial to obtain a fresh quote, which is a hardware-signed structure produced by the TDX module. This quote contains the current values of the measurement registers (MRTD and RTMR0–RTMR3), as well as a 64-byte report_data payload supplied by the caller. Because the CPU cryptographically signs those register values, any client holding Intel’s public key can verify that (a) the measurements truly came from an untampered TDX guest and (b) the included report_data is exactly what the guest intended to prove.

To build a secure channel with an application running in the CVM, dstack uses a TLS certificate that carries remote-attestation evidence. The idea, borrowed from SGX RA-TLS, is to bind the TLS public key to a given attestation by requesting a quote whose report_data is the application’s public key (or a hash of it). Dstack’s implementation lives in the generate_ra_cert function in ra-tls/src/cert.rs:

// Bind the TLS public key into the 64-byte report_data of the quote.
let report_data = QuoteContentType::RaTlsCert.to_report_data(&pubkey);
let (_, quote) = get_quote(&report_data, None).context("Failed to get quote")?;
// The event log lets a verifier replay RTMR0-3.
let event_logs = read_event_logs().context("Failed to read event logs")?;
let event_log = serde_json::to_vec(&event_logs).context("Failed to serialize event logs")?;
// Embed the quote and the event log in the certificate request.
let req = CertRequest::builder()
    .subject("RA-TLS TEMP Cert")
    .quote(&quote)
    .event_log(&event_log)
    .key(&key)
    .build();

First, the code computes report_data by embedding the freshly generated TLS public key. Then it calls get_quote(report_data) to receive a signed quote over MRTD and RTMR0–3 plus that exact report_data. It also reads the runtime event log (JSON-serializable) so that a verifier can recompute RTMR0–3. Finally, it builds a certificate request that includes the new private key, the raw quote blob, and the serialized event log. The CA signs this request, producing a leaf certificate whose custom extensions carry the quote and the event log.

Concretely, the extensions used for the TLS certificate are:

/// OID for the SGX/TDX quote extension.
pub const PHALA_RATLS_QUOTE: &[u64] = &[1, 3, 6, 1, 4, 1, 62397, 1, 1];
/// OID for the TDX event log extension.
pub const PHALA_RATLS_EVENT_LOG: &[u64] = &[1, 3, 6, 1, 4, 1, 62397, 1, 2];
/// OID for the TDX app ID extension.
pub const PHALA_RATLS_APP_ID: &[u64] = &[1, 3, 6, 1, 4, 1, 62397, 1, 3];
/// OID for Special Certificate Usage.
pub const PHALA_RATLS_CERT_USAGE: &[u64] = &[1, 3, 6, 1, 4, 1, 62397, 1, 4];
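These OIDs live under 1.3.6.1.4.1, the IANA private-enterprise arc. To illustrate how such an OID appears inside an X.509 extension, the following Rust sketch (the helper names are hypothetical) renders the arcs in dotted form and DER-encodes them: the first two arcs are packed as 40 * a0 + a1, and every arc is written in base-128 with a continuation bit. It assumes short-form lengths, which suffice for these OIDs.

```rust
pub const PHALA_RATLS_QUOTE: &[u64] = &[1, 3, 6, 1, 4, 1, 62397, 1, 1];

/// Encodes an OBJECT IDENTIFIER as DER: tag 0x06, length, content.
/// Assumes at least two arcs and a short-form length (< 128 content bytes).
pub fn encode_oid_der(arcs: &[u64]) -> Vec<u8> {
    assert!(arcs.len() >= 2, "an OID needs at least two arcs");
    let mut content = Vec::new();
    // The first two arcs share one sub-identifier: 40 * a0 + a1.
    push_base128(&mut content, arcs[0] * 40 + arcs[1]);
    for &arc in &arcs[2..] {
        push_base128(&mut content, arc);
    }
    assert!(content.len() < 128, "long-form lengths are not handled here");
    let mut der = vec![0x06, content.len() as u8];
    der.extend_from_slice(&content);
    der
}

/// Big-endian base-128; every byte except the last has bit 0x80 set.
fn push_base128(out: &mut Vec<u8>, mut value: u64) {
    let mut tmp = vec![(value & 0x7f) as u8];
    value >>= 7;
    while value > 0 {
        tmp.push(((value & 0x7f) as u8) | 0x80);
        value >>= 7;
    }
    tmp.reverse();
    out.extend_from_slice(&tmp);
}

/// Renders arcs in the usual dotted notation.
pub fn oid_to_string(arcs: &[u64]) -> String {
    arcs.iter().map(|a| a.to_string()).collect::<Vec<_>>().join(".")
}
```

For example, the enterprise arc 62397 needs three base-128 bytes (0x83 0xE7 0x3D), so the full quote OID encodes to a 10-byte content field.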

The resulting certificate can be presented by the application during the TLS handshake. A remote client then performs these checks in sequence:

- Verify the X.509 signature chain, ensuring the leaf certificate was issued by the trusted RA-TLS CA.

- Extract the embedded quote and event log.

- Verify Intel’s signature on the quote, confirming that the quoted measurements came from a genuine TDX CVM.

- Replay the JSON event log to recompute RTMR0–3 and compare those values to what the quote claims.

- Check that the quoted report_data exactly matches the value derived from the certificate’s public key.

Only if all of these checks succeed can the client be confident that “this TLS endpoint is running on an untampered Dstack OS image inside a genuine TDX guest, and its TLS key is freshly generated inside that enclave.”

Binding the TLS public key into report_data is vital. Without that step, an attacker could replay a valid quote (which signs some arbitrary report_data) inside a new certificate using a different keypair, tricking clients into believing the new key was attested. Moreover, by integrating attestation into standard TLS, one can build a secure channel to the TDX CVM without developing an ad-hoc protocol.
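The following Rust sketch models the client-side checks and shows why the binding matters: reusing a valid quote with a different key fails the report_data comparison. It is a simplification under stated assumptions: X.509 chain validation and Intel’s quote signature check are represented by precomputed booleans, the report_data derivation (the hypothetical `report_data_for`, with a `ratls-cert:` tag) is invented for illustration, and a toy non-cryptographic hash replaces the real digests.

```rust
pub const RTMR_LEN: usize = 48;

/// Toy NON-cryptographic hash with an N-byte output (stands in for SHA-384/512).
fn toy_hash<const N: usize>(data: &[u8]) -> [u8; N] {
    let mut out = [0u8; N];
    for (i, byte) in out.iter_mut().enumerate() {
        let mut h: u64 = 0xcbf2_9ce4_8422_2325 ^ i as u64;
        for &b in data {
            h ^= u64::from(b);
            h = h.wrapping_mul(0x0000_0100_0000_01b3);
        }
        *byte = (h >> 56) as u8;
    }
    out
}

/// Hypothetical parsed RA-TLS leaf certificate. The two booleans stand for the
/// outcomes of X.509 chain validation and Intel quote-signature verification.
pub struct RaCert {
    pub chain_valid: bool,
    pub quote_signature_valid: bool,
    pub public_key: Vec<u8>,
    pub quote_rtmrs: [[u8; RTMR_LEN]; 4],
    pub quote_report_data: [u8; 64],
    /// Event log entries as (RTMR index, 48-byte event digest).
    pub event_log: Vec<(usize, [u8; RTMR_LEN])>,
}

#[derive(Debug, PartialEq)]
pub enum VerifyError {
    ChainInvalid,
    QuoteSignatureInvalid,
    BadEventLog,
    RtmrMismatch(usize),
    ReportDataMismatch,
}

/// Hypothetical binding: report_data = H("ratls-cert:" || public_key), 64 bytes.
pub fn report_data_for(public_key: &[u8]) -> [u8; 64] {
    let mut buf = b"ratls-cert:".to_vec();
    buf.extend_from_slice(public_key);
    toy_hash(&buf)
}

/// Replays the log from all-zero registers; an unknown register index is rejected.
pub fn replay(log: &[(usize, [u8; RTMR_LEN])]) -> Option<[[u8; RTMR_LEN]; 4]> {
    let mut rtmrs = [[0u8; RTMR_LEN]; 4];
    for (idx, digest) in log {
        let reg = rtmrs.get_mut(*idx)?;
        let mut buf = reg.to_vec();
        buf.extend_from_slice(digest);
        *reg = toy_hash(&buf);
    }
    Some(rtmrs)
}

/// Runs the checks in order and stops at the first failure.
pub fn verify(cert: &RaCert) -> Result<(), VerifyError> {
    if !cert.chain_valid {
        return Err(VerifyError::ChainInvalid);
    }
    if !cert.quote_signature_valid {
        return Err(VerifyError::QuoteSignatureInvalid);
    }
    let replayed = replay(&cert.event_log).ok_or(VerifyError::BadEventLog)?;
    for (i, (got, claimed)) in replayed.iter().zip(cert.quote_rtmrs.iter()).enumerate() {
        if got != claimed {
            return Err(VerifyError::RtmrMismatch(i));
        }
    }
    if report_data_for(&cert.public_key) != cert.quote_report_data {
        return Err(VerifyError::ReportDataMismatch);
    }
    Ok(())
}
```

The report_data check comes last because it is the only one that ties the attested state to the key actually used in the handshake; every earlier check would still pass for a replayed quote.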

RA-RPC

RA RPC is the internal gRPC-style interface exposed by the Dstack guest agent inside a CVM. It augments traditional remote procedure calls with attestation information by securing the prpc channel with RA-TLS. The guest agent uses RA RPC to let containerized applications perform attestation-related operations without direct access to the TDX hardware. For instance, through GetTlsKey, DeriveKey, GetQuote, and EmitEvent, applications can request new cryptographic key material whose generation is bound to specific TDX measurements, obtain fresh TDX quotes (including the event log needed to replay RTMR extensions), and extend the RTMR3 register with application-defined events. All of these requests and responses are marshalled via prpc and carry the necessary payloads (e.g., TLS public keys or application data) so that the dockerized applications can verify the authenticity and integrity of the TDX guest state.

With the information obtained from the guest agent via RA RPC, apps within the CVM can establish secure channels (via RA-TLS certificates) and prove to remote clients that they are running on an unmodified, correctly measured Dstack OS image. In sum, RA RPC provides a programmatic API for leveraging TDX attestation and key derivation directly from application code running in standard Docker containers, via the guest agent.

Deployment

The deployment of a new dstack instance is performed by the VMM server. Since this service acts as a bridge between the CVM and the application developer, it is assumed to be untrusted. The application developer interacts with the VMM server through the VMM client, either via a browser or the CLI.

The application developer needs the following configuration to deploy the application:

- App compose: stored as app-compose.json

- Instance information: stored as .instance-info

- System configuration: stored as .sys-config.json

- Environment variables: stored as .encrypted-env in encrypted form

- Application-specific configuration: stored as .user-config

These configurations will be temporarily stored in a shared folder on the host server. When the CVM starts, it will mount this folder and load the configurations into the CVM.

Once the instance is created, the user can manage its lifecycle, including starting, shutting down, terminating, or updating its configuration.

Application compose

This is the main configuration of the application that consists of the following data structure in JSON format:

manifest_version: integer # Version (currently defaults to 2)
name: string # Name of the instance
runner: string # Name of the runner (currently defaults to "docker-compose")
docker_compose_file: string # YAML string representing the docker-compose config
docker_config: object # Additional Docker settings (currently empty)
kms_enabled: boolean # Enable/disable KMS
gateway_enabled: boolean # Enable/disable gateway
public_logs: boolean # Whether logs are publicly visible
public_sysinfo: boolean # Whether system info is public
public_tcbinfo: boolean # Whether TCB info is public
local_key_provider_enabled: boolean # Use a local key provider
allowed_envs: array of string # List of allowed environment variable names
no_instance_id: boolean # Disable instance ID generation
secure_time: boolean # Whether secure time is enabled
prelaunch_script: string # Prelaunch bash script that runs before starting containers

By default, the SHA256 digest of this compose content becomes the application ID (app_id). Note that once the application is created, subsequent updates to the application compose do not change the app_id.

Instance information

This metadata describes the application instance and contains the following fields:

- app_id: the application ID, which by default is the SHA256 digest of app-compose.json, truncated to the first 20 bytes.

- instance_id: the instance ID, which by default is the SHA256 digest of instance_id_seed || app_id, truncated to the first 20 bytes. This value is empty if no_instance_id in app-compose.json is true.

- instance_id_seed: the random seed that determines the instance ID.

- bootstraped: a boolean indicating whether the instance has been initialized.
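The two ID derivations above can be sketched in Rust as follows. To keep the example self-contained it includes a compact SHA-256 implementation (FIPS 180-4); real code would use an audited crate. The helper names are hypothetical, and hashing the raw bytes of app-compose.json, as well as using the 20-byte app_id in the instance_id input, are assumptions about exactly what dstack feeds to the hash.

```rust
/// Compact SHA-256 (FIPS 180-4), included only to keep the sketch crate-free.
pub fn sha256(data: &[u8]) -> [u8; 32] {
    const K: [u32; 64] = [
        0x428a2f98, 0x71374491, 0xb5c0fbcf, 0xe9b5dba5, 0x3956c25b, 0x59f111f1, 0x923f82a4, 0xab1c5ed5,
        0xd807aa98, 0x12835b01, 0x243185be, 0x550c7dc3, 0x72be5d74, 0x80deb1fe, 0x9bdc06a7, 0xc19bf174,
        0xe49b69c1, 0xefbe4786, 0x0fc19dc6, 0x240ca1cc, 0x2de92c6f, 0x4a7484aa, 0x5cb0a9dc, 0x76f988da,
        0x983e5152, 0xa831c66d, 0xb00327c8, 0xbf597fc7, 0xc6e00bf3, 0xd5a79147, 0x06ca6351, 0x14292967,
        0x27b70a85, 0x2e1b2138, 0x4d2c6dfc, 0x53380d13, 0x650a7354, 0x766a0abb, 0x81c2c92e, 0x92722c85,
        0xa2bfe8a1, 0xa81a664b, 0xc24b8b70, 0xc76c51a3, 0xd192e819, 0xd6990624, 0xf40e3585, 0x106aa070,
        0x19a4c116, 0x1e376c08, 0x2748774c, 0x34b0bcb5, 0x391c0cb3, 0x4ed8aa4a, 0x5b9cca4f, 0x682e6ff3,
        0x748f82ee, 0x78a5636f, 0x84c87814, 0x8cc70208, 0x90befffa, 0xa4506ceb, 0xbef9a3f7, 0xc67178f2,
    ];
    let mut h: [u32; 8] = [
        0x6a09e667, 0xbb67ae85, 0x3c6ef372, 0xa54ff53a, 0x510e527f, 0x9b05688c, 0x1f83d9ab, 0x5be0cd19,
    ];
    // Padding: 0x80, zeros up to 56 mod 64, then the bit length as a big-endian u64.
    let mut msg = data.to_vec();
    msg.push(0x80);
    while msg.len() % 64 != 56 {
        msg.push(0);
    }
    msg.extend_from_slice(&((data.len() as u64) * 8).to_be_bytes());
    for block in msg.chunks(64) {
        // Message schedule.
        let mut w = [0u32; 64];
        for i in 0..16 {
            w[i] = u32::from_be_bytes([block[4 * i], block[4 * i + 1], block[4 * i + 2], block[4 * i + 3]]);
        }
        for i in 16..64 {
            let s0 = w[i - 15].rotate_right(7) ^ w[i - 15].rotate_right(18) ^ (w[i - 15] >> 3);
            let s1 = w[i - 2].rotate_right(17) ^ w[i - 2].rotate_right(19) ^ (w[i - 2] >> 10);
            w[i] = w[i - 16].wrapping_add(s0).wrapping_add(w[i - 7]).wrapping_add(s1);
        }
        // 64 compression rounds.
        let [mut a, mut b, mut c, mut d, mut e, mut f, mut g, mut hh] = h;
        for i in 0..64 {
            let s1 = e.rotate_right(6) ^ e.rotate_right(11) ^ e.rotate_right(25);
            let ch = (e & f) ^ (!e & g);
            let t1 = hh.wrapping_add(s1).wrapping_add(ch).wrapping_add(K[i]).wrapping_add(w[i]);
            let s0 = a.rotate_right(2) ^ a.rotate_right(13) ^ a.rotate_right(22);
            let maj = (a & b) ^ (a & c) ^ (b & c);
            let t2 = s0.wrapping_add(maj);
            hh = g;
            g = f;
            f = e;
            e = d.wrapping_add(t1);
            d = c;
            c = b;
            b = a;
            a = t1.wrapping_add(t2);
        }
        let state = [a, b, c, d, e, f, g, hh];
        for i in 0..8 {
            h[i] = h[i].wrapping_add(state[i]);
        }
    }
    let mut out = [0u8; 32];
    for (i, word) in h.iter().enumerate() {
        out[4 * i..4 * i + 4].copy_from_slice(&word.to_be_bytes());
    }
    out
}

fn truncate20(digest: &[u8; 32]) -> [u8; 20] {
    let mut out = [0u8; 20];
    out.copy_from_slice(&digest[..20]);
    out
}

/// Hypothetical helper: app_id = SHA-256(app-compose.json)[..20].
pub fn app_id(app_compose_json: &[u8]) -> [u8; 20] {
    truncate20(&sha256(app_compose_json))
}

/// Hypothetical helper: instance_id = SHA-256(instance_id_seed || app_id)[..20],
/// or None when no_instance_id is true.
pub fn instance_id(seed: &[u8], app_id_bytes: &[u8; 20], no_instance_id: bool) -> Option<[u8; 20]> {
    if no_instance_id {
        return None;
    }
    let mut buf = seed.to_vec();
    buf.extend_from_slice(app_id_bytes);
    Some(truncate20(&sha256(&buf)))
}

/// Lowercase hex rendering for display.
pub fn hex(bytes: &[u8]) -> String {
    bytes.iter().map(|b| format!("{:02x}", b)).collect()
}
```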

Note that these values are generated at runtime inside the CVM when system setup runs (see System Setup, Stage 0).

System configuration

This is the system configuration that defines the VM’s external configuration, with the following JSON structure:

kms_urls: array of string # List of KMS service URLs
gateway_urls: array of string # List of gateway service URLs
pccs_url: string # URL of the PCCS service
docker_registry: string # URL of the Docker registry
host_api_url: string # VSOCK URL of the host API
vm_config: string # JSON string of the VM configuration containing os_image_hash, cpu_count, and memory_size

All values here, except cpu_count and memory_size in vm_config, are defined by the VMM server and cannot be set by the client.
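This trust split can be illustrated with a small Rust sketch in which the VMM server supplies every field and copies in only the two client-selectable values. The struct layout and the `ClientVmRequest` type are hypothetical; in dstack, vm_config is a JSON string rather than a nested struct.

```rust
/// Assumed shape of vm_config (a JSON string in dstack, modeled as a struct here).
#[derive(Debug, Clone, PartialEq)]
pub struct VmConfig {
    pub os_image_hash: String,
    pub cpu_count: u32,
    pub memory_size: u64,
}

/// Fields of .sys-config.json as listed above.
#[derive(Debug, Clone, PartialEq)]
pub struct SysConfig {
    pub kms_urls: Vec<String>,
    pub gateway_urls: Vec<String>,
    pub pccs_url: String,
    pub docker_registry: String,
    pub host_api_url: String,
    pub vm_config: VmConfig,
}

/// Hypothetical client request: only the fields a client may choose.
pub struct ClientVmRequest {
    pub cpu_count: u32,
    pub memory_size: u64,
}

/// Starts from the server's values and copies in only cpu_count and memory_size.
pub fn build_sys_config(server_defaults: &SysConfig, req: &ClientVmRequest) -> SysConfig {
    let mut cfg = server_defaults.clone();
    cfg.vm_config.cpu_count = req.cpu_count;
    cfg.vm_config.memory_size = req.memory_size;
    cfg
}
```

Because the request type carries no other fields, a client simply cannot express, for example, a different kms_urls list; that decision stays with the server.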

Environment variables

Dstack uses encrypted environment variables to let the app developer load secret configuration values into the CVM. Since these variables must be stored temporarily on the host server before being loaded into the CVM, their content is encrypted so that the host server cannot break their confidentiality.

The workflow of the encryption is as follows:

- The app developer specifies all the needed envs in app-compose.json via the VMM client (Web UI or CLI).

- Before the envs are sent to the VMM server:

  - The VMM client fetches the app’s encryption public key by making an RPC call to the KMS, specifying the app_id.

  - The KMS responds with the public key along with an ECDSA k256 signature.

  - The VMM client can verify the signature to check that the signer is trusted and that the resulting encryption public key is legitimate.

- With the encryption public key, the VMM client:

  - Converts the given environment variables to JSON bytes.

  - Generates an ephemeral X25519 key pair.

  - Computes a shared secret from the ephemeral private key and the encryption public key.

  - Uses the shared secret directly as the 32-byte AES-GCM key.

  - Encrypts the JSON bytes with AES-GCM using a randomly generated IV.

  - Produces the final encrypted value: ephemeral public key || IV || ciphertext.

- When the app developer deploys the app, the client sends all the required configuration along with this encrypted value to the VMM server, which stores it as .encrypted-env in the shared host directory.

- The CVM later retrieves the envs as follows:

  - The CVM requests the app keys directly from the KMS, which provides env_crypt_key, the private key corresponding to the encryption public key.

  - The CVM derives the shared secret via X25519 key exchange, using the ephemeral public key attached to the encrypted value.

  - The CVM decrypts the ciphertext with AES-GCM using the derived shared secret, recovering the JSON bytes.

  - The CVM parses the JSON and keeps only the variables whose keys are listed in allowed_envs in app-compose.json. It also performs a basic regex check on the validity of each value.

  - The result is written in env-file format to /dstack/.host-shared/.decrypted-env, which is later loaded by app-compose.service to become system-wide environment variables.
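The envelope layout and the allowed_envs filter can be sketched in Rust as below; decryption itself (X25519 and AES-GCM) is omitted because it requires external crates. Several details are assumptions: a 12-byte IV (the common AES-GCM nonce size), a 16-byte authentication tag appended to the ciphertext, the JSON already parsed into a map, and a simple newline/NUL check standing in for dstack’s regex. The function names are hypothetical.

```rust
use std::collections::BTreeMap;

/// X25519 public keys are 32 bytes.
pub const EPHEMERAL_PK_LEN: usize = 32;
/// Assumption: the common 96-bit AES-GCM nonce.
pub const IV_LEN: usize = 12;
/// Assumption: a 16-byte GCM tag is appended to the ciphertext.
pub const TAG_LEN: usize = 16;

/// Borrowed view of `ephemeral public key || IV || ciphertext`.
#[derive(Debug, PartialEq)]
pub struct Envelope<'a> {
    pub ephemeral_pk: &'a [u8],
    pub iv: &'a [u8],
    pub ciphertext: &'a [u8],
}

/// Splits the blob without decrypting; rejects blobs too short to hold a tag.
pub fn parse_envelope(blob: &[u8]) -> Option<Envelope<'_>> {
    if blob.len() < EPHEMERAL_PK_LEN + IV_LEN + TAG_LEN {
        return None;
    }
    let (ephemeral_pk, rest) = blob.split_at(EPHEMERAL_PK_LEN);
    let (iv, ciphertext) = rest.split_at(IV_LEN);
    Some(Envelope { ephemeral_pk, iv, ciphertext })
}

/// Keeps only keys listed in allowed_envs and renders KEY=value lines.
/// The newline/NUL rejection is a hypothetical stand-in for dstack's regex check.
pub fn filter_envs(decrypted: &BTreeMap<String, String>, allowed_envs: &[&str]) -> String {
    let mut out = String::new();
    for (key, value) in decrypted {
        if !allowed_envs.iter().any(|a| *a == key.as_str()) {
            continue; // not allow-listed: silently dropped
        }
        if value.contains('\n') || value.contains('\0') {
            continue; // would break the env-file format
        }
        out.push_str(&format!("{}={}\n", key, value));
    }
    out
}
```

The allow-list matters because the host controls .encrypted-env: without it, anyone able to obtain the app’s encryption public key could try to inject variables such as LD_PRELOAD that the app developer never declared.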

Application-specific configuration

This is an optional application-specific configuration that can be accessed by the application inside the docker container. It will be stored in the /dstack/.user-config.

CVM Runtime Workflow

When the CVM instance is launched, it follows a deterministic sequence of operations to initialize, verify, and prepare the environment for secure execution of the user application.

Booting

The VMM server launches the CVM using QEMU with the set of predefined parameters described in Intel TDX Runtime Measurements. The system hardware and boot environment are initialized using the firmware specified in the ovmf.fd file. Subsequently, the kernel image (bzImage) is loaded by OVMF.

Upon execution, the kernel extracts and runs the initial userland contained in initramfs.cpio.gz. This initramfs is responsible for mounting the root filesystem (rootfs.img.verity) and verifying its integrity with dm-verity, based on the rootfs hash passed on the kernel command line (dstack.rootfs_hash). The kernel command-line arguments passed via -append are parsed and acted upon by the /init script.

System Setup

Once the operating system has fully booted, systemd triggers the dstack-prepare service to set up the system prior to application startup. This setup is executed by the dstack-util setup tool, which runs in two main phases: stage0 and stage1.

Stage 0

This phase primarily focuses on preparing the CVM’s file system and performing application-info measurements.

1. Copy and read shared files

Configuration files generated during the deployment phase are mounted into the CVM from the shared host folder as read-only, then copied into the /dstack directory.

All files except .encrypted-env and .user-config are deserialized from JSON and parsed into internal structures:

- app-compose.json -> app_compose

- .instance-info -> instance_info

- .sys-config.json -> sys_config

2. Measure app info

- The SHA256 hash of app_compose is computed as compose_hash. If mr_config_id is defined (non-zero) in the initial attestation quote, this hash must match it. A mismatch results in setup failure.

- Empty values in instance_info are generated using the mechanisms described in Instance information.

- Measurements are sequentially extended into RTMR3 with the following event sequence:

  - system-preparing -> zero value
  - app-id -> instance_info.app_id
  - compose-hash -> compose_hash
  - instance-id -> instance_info.instance_id
  - boot-mr-done -> zero value
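The mr_config_id gate can be sketched as follows. MRCONFIGID is a 48-byte field in the TDX quote, while compose_hash is a 32-byte SHA-256 digest, so some encoding must map one onto the other; this sketch assumes, hypothetically, that the compose hash occupies the first 32 bytes. dstack-util should be consulted for the real layout. An all-zero value is treated as unset, as described above, and only a mismatch aborts setup.

```rust
/// MRCONFIGID is a 48-byte field of the TDX TD report and quote.
pub const MR_CONFIG_ID_LEN: usize = 48;

#[derive(Debug, PartialEq)]
pub enum ConfigIdCheck {
    /// All-zero mr_config_id: nothing to enforce.
    Unset,
    /// The compose hash matches the pinned value.
    Match,
    /// The pinned value differs: system setup must abort.
    Mismatch,
}

/// Hypothetical layout: a non-zero mr_config_id carries compose_hash in its
/// first 32 bytes. dstack's actual encoding may differ.
pub fn check_mr_config_id(mr_config_id: &[u8; MR_CONFIG_ID_LEN], compose_hash: &[u8; 32]) -> ConfigIdCheck {
    if mr_config_id.iter().all(|&b| b == 0) {
        return ConfigIdCheck::Unset;
    }
    if mr_config_id[..32] == compose_hash[..] {
        ConfigIdCheck::Match
    } else {
        ConfigIdCheck::Mismatch
    }
}

/// Setup gate: only a mismatch is fatal.
pub fn enforce(mr_config_id: &[u8; MR_CONFIG_ID_LEN], compose_hash: &[u8; 32]) -> Result<(), String> {
    match check_mr_config_id(mr_config_id, compose_hash) {
        ConfigIdCheck::Mismatch => Err("compose hash does not match mr_config_id".to_string()),
        _ => Ok(()),
    }
}
```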

3. Request app keys

Application-specific keys are requested from a KMS or a local Intel SGX key provisioner (if KMS is not enabled). The retrieved keys include:

- disk_crypt_key: Used for full disk encryption

- env_crypt_key: X25519 private key for decrypting environment variables

- k256_key: ECDSA private key for digital signatures

- k256_signature: ECDSA signature signed by the root k256 key

- gateway_app_id: Application ID used by the gateway reverse proxy

- ca_cert: TLS CA certificate for secure HTTPS communication

- key_provider: Details about the key provider config (KMS or local)

These values are saved in /dstack/.appkeys.json.

4. Mount data disk

To protect persistent storage from host access, dstack uses dm-crypt LUKS2 full disk encryption. The disk_crypt_key is used to encrypt and decrypt the data volume.

If this is the first initialization of the CVM, the disk is formatted using:

echo -n $disk_crypt_key | cryptsetup luksFormat --type luks2 --cipher aes-xts-plain64 --pbkdf pbkdf2 -d- /dev/vdb dstack_data_disk

The encrypted disk is then opened and mounted with a ZFS file system.

After successful completion, the setup logs a final system-ready event (with zero value) into RTMR3, indicating that the system is fully initialized.

Stage 1

1. Unseal encrypted envs

The encrypted environment variables are decrypted using the env_crypt_key, as described in environment variables, and stored in /dstack/.host-shared/.decrypted-env.

2. Setup guest agent

The setup writes the configuration file (stored in /dstack/agent.json) of the dstack-guest-agent service with the following data based on the user configuration in app_compose and sys_config:

{
    "default": {
        "core": {
            "app_name": app_compose.name,
            "public_logs": app_compose.public_logs,
            "public_sysinfo": app_compose.public_sysinfo,
            "pccs_url": sys_config.pccs_url,
            "data_disks": mount_point
        }
    }
}

3. Setup dstack gateway

To communicate securely with networks outside the CVM, the setup establishes a WireGuard connection. It sets up the WireGuard key and certificate, then tries to register the CVM with the gateway URLs defined in the configuration. Based on the gateway’s response, it constructs the WireGuard configuration and iptables firewall rules.

4. Setup docker registry

If supplied, the setup uses a custom Docker registry URL specified in the app_compose config (along with Docker credentials such as a username and token). In that case, it updates the Docker daemon configuration in /etc/docker/daemon.json, and Docker later uses the specified credentials and registry mirror when pulling images.

Application compose

After everything is set up, the CVM will start app-compose.service, which mainly tries to start the Docker service with the main application.

First, it reads all config from the .sys-config.json file and sets PCCS_URL as an environment variable (if present). Then, it will execute the pre-launch script that is defined in the app-compose.json with the source command. After the pre-launch script finishes, it removes all orphan containers and restarts the Docker service, then launches the Docker container using the compose file specified in the app-compose.json in detached mode.

Guest agent service

After the main application is launched via Docker container, the dstack-guest-agent service is started using the configuration from agent.json. Then, the end user can start interacting with the application.

Meta-dstack

Overview of the build

The meta-dstack repository uses Yocto to build a UEFI firmware image, a kernel image, an initial RAM filesystem (initramfs), and root filesystems (one for development and one for production environments).

The main entry point is reprobuild.sh, which launches a Docker container and uses it to run the repository’s build.sh script. The Docker container makes it easier to create a reproducible environment.

The command run is build.sh guest ./bb-build, with the environment variable DSTACK_TAR_RELEASE=1. It produces artifacts that are then moved to the /dist folder on the host.

The build uses a default config file (unless a build-config.sh is created):

# DNS domain of kms rpc and dstack-gateway rpc
# *.1022.kvin.wang resolves to 10.0.2.2 which is the IP of the host system
# from CVMs point of view
KMS_DOMAIN=kms.1022.kvin.wang
GATEWAY_DOMAIN=gateway.1022.kvin.wang

# CIDs allocated to VMs start from this number of type unsigned int32
VMM_CID_POOL_START=$CID_POOL_START
# CID pool size
VMM_CID_POOL_SIZE=1000
VMM_RPC_LISTEN_PORT=$BASE_PORT
# Whether port mapping from host to CVM is allowed
VMM_PORT_MAPPING_ENABLED=true
# Host API configuration, type of uint32
VMM_VSOCK_LISTEN_PORT=$BASE_PORT

KMS_RPC_LISTEN_PORT=$(($BASE_PORT + 1))
GATEWAY_RPC_LISTEN_PORT=$(($BASE_PORT + 2))
GATEWAY_WG_INTERFACE=dgw-$USER
GATEWAY_WG_LISTEN_PORT=$(($BASE_PORT + 3))
GATEWAY_WG_IP=10.$SUBNET_INDEX.3.1
GATEWAY_SERVE_PORT=$(($BASE_PORT + 4))
GATEWAY_CERT=
GATEWAY_KEY=
BIND_PUBLIC_IP=0.0.0.0
GATEWAY_PUBLIC_DOMAIN=app.kvin.wang

# for certbot
CERTBOT_ENABLED=false
CF_API_TOKEN=
CF_ZONE_ID=
ACME_URL=https://acme-staging-v02.api.letsencrypt.org/directory
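The port and address scheme in this config is plain arithmetic on BASE_PORT and SUBNET_INDEX. A small Rust sketch (hypothetical helper names) makes the derivation explicit and uses checked addition, so a BASE_PORT too close to 65535 is rejected instead of producing an out-of-range port.

```rust
use std::net::Ipv4Addr;

/// Ports derived from BASE_PORT in the default build config (hypothetical struct).
#[derive(Debug, PartialEq)]
pub struct Ports {
    pub vmm_rpc: u16,
    pub vmm_vsock: u32, // vsock ports are uint32, per the config comment
    pub kms_rpc: u16,
    pub gateway_rpc: u16,
    pub gateway_wg: u16,
    pub gateway_serve: u16,
}

/// Mirrors BASE_PORT, +1, +2, +3, +4; None if any TCP/UDP port would exceed 65535.
pub fn derive_ports(base_port: u16) -> Option<Ports> {
    Some(Ports {
        vmm_rpc: base_port,
        vmm_vsock: u32::from(base_port),
        kms_rpc: base_port.checked_add(1)?,
        gateway_rpc: base_port.checked_add(2)?,
        gateway_wg: base_port.checked_add(3)?,
        gateway_serve: base_port.checked_add(4)?,
    })
}

/// Mirrors GATEWAY_WG_IP=10.$SUBNET_INDEX.3.1.
pub fn gateway_wg_ip(subnet_index: u8) -> Ipv4Addr {
    Ipv4Addr::new(10, subnet_index, 3, 1)
}
```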

The build.sh script eventually runs the repository’s Makefile as follows:

if [ -z "$BBPATH" ]; then
    source $SCRIPT_DIR/dev-setup $1
fi
make -C $META_DIR dist DIST_DIR=$IMAGES_DIR BB_BUILD_DIR=${BBPATH}

It first checks whether BBPATH is set. If it is not, it sources the ./dev-setup script, which initializes the BitBake environment and sets BBPATH.

BBPATH is then passed as BB_BUILD_DIR to the make command, along with META_DIR (the repository path) and IMAGES_DIR (the path to an images subfolder).

./dev-setup simply adds all the layers to the build folder (./bb-build/conf/bblayers.conf in this case) by calling bitbake-layers add-layer:

LAYERS="$THIS_DIR/meta-confidential-compute \
    $THIS_DIR/meta-openembedded/meta-oe \
    $THIS_DIR/meta-openembedded/meta-python \
    $THIS_DIR/meta-openembedded/meta-networking \
    $THIS_DIR/meta-openembedded/meta-filesystems \
    $THIS_DIR/meta-virtualization \
    $THIS_DIR/meta-rust-bin \
    $THIS_DIR/meta-security \
    $THIS_DIR/meta-dstack"
# needed to initialize bitbake binaries
source $OE_INIT $BUILD_DIR
bitbake-layers add-layer $LAYERS

(Note that here the default bb-build/conf/local.conf is used.)

The following Python scripts are added to PATH. They do not end up in the BitBake image, but they are useful for quickly deploying and interacting with recently created images.

scripts/bin
├── dstack -> dstack.py
├── dstack.py
├── host_api.py
└── lsproc.py

The Makefile is relatively small, calling bitbake to build five images:

export BB_BUILD_DIR
export DIST_DIR

DIST_NAMES ?= dstack dstack-dev
ROOTFS_IMAGE_NAMES = $(addsuffix -rootfs,${DIST_NAMES})

all: dist

dist: images
	$(foreach dist_name,${DIST_NAMES},./mkimage.sh --dist-name $(dist_name);)

images:
	bitbake virtual/kernel dstack-initramfs dstack-ovmf $(ROOTFS_IMAGE_NAMES)

As a result, five images are built:

- dstack-ovmf: the UEFI firmware used to boot the kernel.

- virtual/kernel: the Linux kernel, tweaked to support TDX.

- dstack-initramfs: the first (and temporary) filesystem that the kernel mounts in volatile memory.

- Two rootfs images: dstack-rootfs for production and dstack-dev-rootfs for development.
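The target list follows directly from the Makefile variables. The Rust sketch below (hypothetical function names) reproduces the `addsuffix` expansion and the per-dist mkimage.sh calls. Since DIST_NAMES is assigned with `?=`, overriding it on the make command line changes how many rootfs images are built.

```rust
/// Mirrors `ROOTFS_IMAGE_NAMES = $(addsuffix -rootfs,${DIST_NAMES})`.
pub fn rootfs_image_names(dist_names: &[&str]) -> Vec<String> {
    dist_names.iter().map(|name| format!("{}-rootfs", name)).collect()
}

/// Mirrors the `images` target: three fixed targets plus one rootfs per dist.
pub fn bitbake_targets(dist_names: &[&str]) -> Vec<String> {
    let mut targets: Vec<String> = ["virtual/kernel", "dstack-initramfs", "dstack-ovmf"]
        .iter()
        .map(|t| t.to_string())
        .collect();
    targets.extend(rootfs_image_names(dist_names));
    targets
}

/// Mirrors the `dist` target: one mkimage.sh invocation per dist name.
pub fn mkimage_commands(dist_names: &[&str]) -> Vec<String> {
    dist_names
        .iter()
        .map(|name| format!("./mkimage.sh --dist-name {}", name))
        .collect()
}
```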

The production dstack-rootfs image is a minimal root filesystem built for production. It strips out shells, SSH servers, getty services, and most debug or development tools, and only contains the bare packages needed to run Dstack guests. The development dstack-dev-rootfs image inherits everything in the prod image plus additional convenience packages (e.g. SSH/getty support, shells, editors, networking and debugging utilities), so developers can quickly SSH in, edit files, and troubleshoot without rebuilding the image.

Layers

The following is a list of Yocto layers included in the build environment, as shown by the bitbake-layers show-layers command. The priority column indicates the precedence of the layer when multiple layers provide the same metadata.

| Layer | Path | Priority |
|---|---|---|
| core | meta-dstack/poky/meta | 5 |
| yocto | meta-dstack/poky/meta-poky | 5 |
| yoctobsp | meta-dstack/poky/meta-yocto-bsp | 5 |
| confidential-compute | meta-dstack/meta-confidential-compute | 20 |
| openembedded-layer | meta-dstack/meta-openembedded/meta-oe | 5 |
| meta-python | meta-dstack/meta-openembedded/meta-python | 5 |
| networking-layer | meta-dstack/meta-openembedded/meta-networking | 5 |
| filesystems-layer | meta-dstack/meta-openembedded/meta-filesystems | 5 |
| virtualization-layer | meta-dstack/meta-virtualization | 8 |
| rust-bin-layer | meta-dstack/meta-rust-bin | 7 |
| security | meta-dstack/meta-security | 8 |
| dstack | meta-dstack/meta-dstack | 20 |
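When the same recipe appears in several layers, BitBake prefers the copy from the layer with the higher BBFILE_PRIORITY, regardless of the recipe’s version. The Rust sketch below models only that rule (ties, PREFERRED_VERSION, and .bbappend files are not modeled), and the recipe-to-layer assignments in the usage are hypothetical.

```rust
/// A layer with its BBFILE_PRIORITY and (hypothetically) the recipes it ships.
pub struct Layer<'a> {
    pub name: &'a str,
    pub priority: u32,
    pub recipes: &'a [&'a str],
}

/// Returns the layer whose copy of `recipe` would be preferred: the highest
/// priority among layers shipping it. Ties, PREFERRED_VERSION, and .bbappend
/// files are deliberately not modeled.
pub fn preferred_layer<'a>(layers: &[Layer<'a>], recipe: &str) -> Option<&'a str> {
    layers
        .iter()
        .filter(|layer| layer.recipes.iter().any(|r| *r == recipe))
        .max_by_key(|layer| layer.priority)
        .map(|layer| layer.name)
}
```

With priority 20, the dstack and confidential-compute layers win over every upstream layer in the table, which is why reviewing recipes in those two layers largely determines what ends up in the image.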