Introduction

On January 22nd, 2025, Celo engaged zkSecurity to perform an audit of the Self project. The assessment lasted for three weeks and focused on one large monorepo as well as some provided documentation and a number of planned discussions with the team.

Scope

The original scope focused on the following three parts:

- Specific core dependencies. This part of the audit focused on changes to the RSA libraries used in the project, the RSA-PSS implementation, and the ECDSA implementation (including the ECC and big-int gadgets it builds upon).

- Protocol circuits and smart contracts. This part of the audit focused on the core circuits of Self used to prove the authenticity of passport data and to selectively disclose passport data in on-chain applications.

- Proof delegation via TEEs. This part looked at the logic involved in delegating user proofs to AWS Nitro Enclaves, AWS’s trusted execution environment (TEE) solution.

Note that the scope was adjusted during the audit: some of the effort originally planned for auditing the TEE code was redirected toward reviewing fixes.

Strategic Recommendations

We recommend the following strategic changes to the Self project:

Strengthen Testing. We recommend expanding test coverage across the protocol, particularly by incorporating more negative testing (i.e., tests designed to fail). This approach helps uncover vulnerabilities early in development. For example, the TEE Client attestation issue (see TEE Client Doesn’t Verify Enclave Attestation) could potentially have been detected through more comprehensive testing.

Enhance Code Maturity. While addressing critical issues in core dependencies (see Big Integer zero-check is not sound) is a vital step, additional measures will help ensure long-term reliability and security. We suggest devoting further engineering resources to strengthen these dependencies, particularly regarding the big integer library used for ECDSA verification (see The big integer library used for ECDSA verification is immature).

Refine the Overall Protocol. We recommend conducting a more thorough review of the protocol from an attacker’s perspective to ensure resilience against a wide range of threats. With deeper analysis, potential vulnerabilities—such as those related to second pre-image attacks (see Second pre-image attacks on PackBytesAndPoseidon may be used to register arbitrary passports and DSC certificates), signature forgery (see Trusting the start offset of the public key inside the certificate may lead to signature forgery and invalid passport registration), and enclave impersonation (see Attestation Endpoint Allows Enclave Impersonation)—could be more effectively mitigated.

Consider a Follow-Up Audit. Given the complexity of this project and the initial time constraints, we recommend scheduling a subsequent audit once the above improvements have been implemented. A deeper assessment after protocol hardening will help confirm that both the design and implementation aspects are robust and secure.

Threat model and security assumptions

All the circuits and contracts in scope have been analyzed under the security assumptions made by the protocol, which we briefly summarize here.

- Active authentication is not currently supported. While active authentication would strengthen the security of the protocol, Self does not currently support it (support is planned for a future version). Known limitations that follow from this, such as the fact that anyone who has at some point scanned a passport can register it on-chain before its real owner does, are not considered in scope.

- Issuers are assumed to be honest. The protocol assumes that issuers are honest and not compromised: its security relies on the certificate chains issued by the nations’ CSCAs and DSCs.

- User secrets cannot be rotated or recovered. This is a limitation of the current design, which is mitigated by storing the user’s secret in the KeyChain of the mobile application. It is planned to add a recovery mechanism in the future, but currently, if the user loses their secret, they will lose access to their passport. Additionally, the phone KeyChain is trusted to be a secure storage for the user’s secret.

- Access to passport data can reveal whether it has been registered. The protocol computes the passport commitment deterministically from the user’s data. This means that anyone with access to that data (e.g., through a passport scan) can tell whether that passport has been registered. This is a known risk and is not considered in scope.

- Timing attacks are not in scope. The protocol can be subject to timing attacks: for example, if a user registers a DSC certificate for their local issuer and then immediately registers a passport signed by that DSC, an observer can infer with some probability that the user is from that region. This is mitigated by assuming that users will not register their passport immediately after registering the DSC certificate (which the application explicitly recommends), and that the anonymity set is large enough to obscure possible correlations.

Methodology

All the circuits and contracts in scope have been manually reviewed. Additionally, circom-specific static analysis tools, as well as internal zkSecurity tools, have been employed to identify common flaws in the circuits.

For the cryptographic primitives, we also employed systematic testing with test vectors from the Wycheproof dataset for selected configurations of key length and hash algorithm (for instance, RSA PKCS1.5 with 2048-bit keys and ECDSA over the P-256 curve), in order to reveal subtle and common implementation errors in signature verification functions. In some cases, to confirm a suspected logic bug, we compared the output of the Self circom circuits against OpenSSL on the same input vectors.

Overview of Self

In this section we give a brief overview of the Self project before delving into deeper discussions.

Biometric Passport Background

Biometric passports allow for two types of authentication: passive and active. All the work done by Self so far focuses on passive authentication, which is a form of authentication supported by all biometric passports.

In both cases, passport data is indirectly signed by a Country Signing Certification Authority (CSCA) through a certificate chain that resembles those seen in the web public key infrastructure:

- the CSCAs representing countries sign intermediary certificates called Document Signing Certificates (DSCs)

- the DSCs sign passport data

In this typical public key infrastructure, root CAs (the CSCAs) are expected to be “cold” and subject to a high level of protection, while intermediary CAs (the DSCs) are expected to be “hot” (as they are continuously used in the issuance of new passports) and potentially rotated more often.

As such, verifying that a passport’s data is authentic involves verifying a certificate chain of two layers: a signature from the CSCA on an intermediary certificate, and a signature from the intermediary certificate on the passport data.

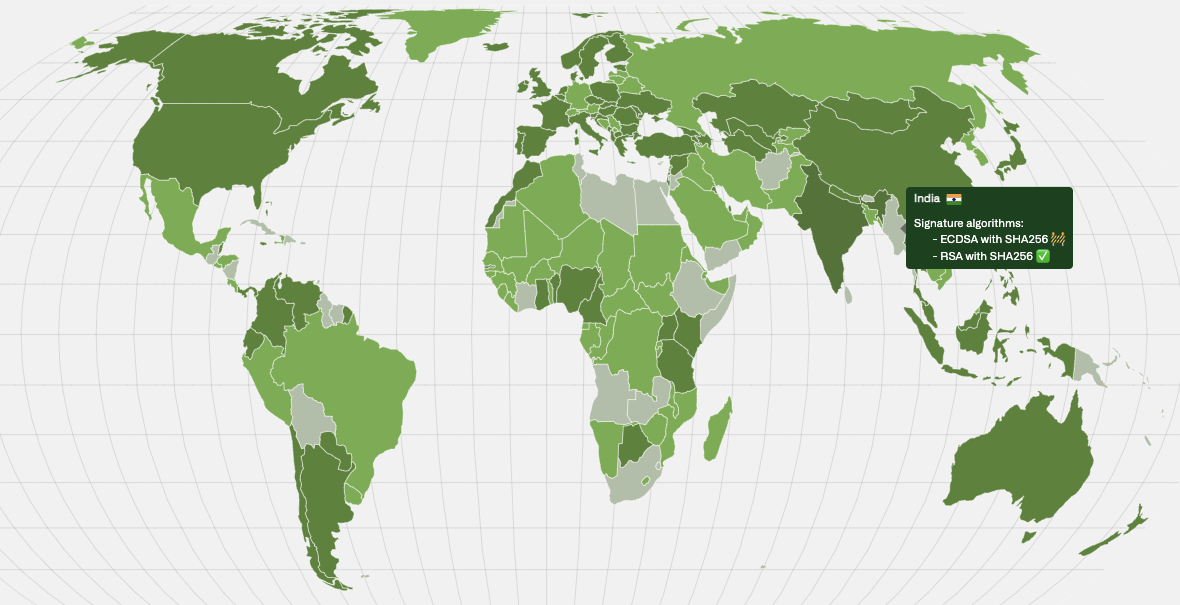

Self uses several sources to retrieve the CSCAs of different countries. Notably, the ICAO, a UN entity, publishes a master list of root certificates (CSCAs). Note also that different countries use different signing schemes, which are mapped on map.openpassport.app.

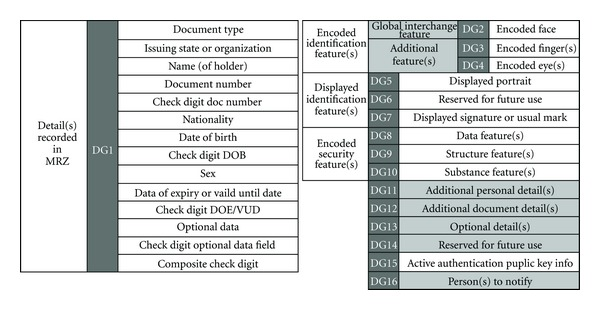

The passport data signed by a DSC is split into different data groups (DGs) where only the first two DGs are relevant in the current Self implementation:

- DG1 basically has all the information you can read in the first page of a passport

- DG2 is a hash of the passport picture which can nicely act as a unique identifier and can potentially help in authenticating the physical holder of the passport

All the data groups are hashed (and compressed into a Merkle tree) and then signed (see Anatomy of Biometric Passports).

Private Passport Disclosure Design

Following the explanations of the previous section, we see that to privately disclose the content of a passport we need to:

- verify a CSCA signature over an intermediary certificate

- verify an intermediary certificate signature over the passport data

- export some specific data (e.g. full name) or prove something specific about the passport data (e.g. person is over 18)

The current Self design goes beyond that and splits the process into three steps:

- dsc: a user can prove that a DSC certificate is valid and generate a commitment to be stored in a Merkle tree (on-chain).

- register: a user can register (a hiding commitment to a) passport on-chain (in a Merkle tree as well) by proving that they own a valid passport (i.e. a passport that is signed by a valid DSC)

- disclose: a user can prove that there exists a registered passport, and that its content satisfies a given predicate (e.g. the person is over 18)

We go over these in more detail in a later section.

The reason for this three-step approach is that proving that signatures are valid is expensive, and one might not want to do it every time if they don’t have to. In addition, for protocols that wish to provide a “proof of person” (related to the problem of sybil resistance), Self must provide a feature that prevents passports from being used more than once.

To prevent re-registration of a passport in the first step of the flow, Self’s design has the user deterministically generate a unique identifier per-passport called a nullifier. A smart contract then stores all nullifiers seen so far, and blocks a registration attempt if its associated nullifier is already contained in the list. Hiding commitments to verified passports are then stored in a Merkle tree for easy retrieval by disclosing proofs.

Note that the stored commitments to valid passports are computed as a hash of the first two data groups and some additional data. Importantly, they include a random secret generated by the user, which becomes relevant later in the disclosure phase.

The smart contracts maintain three Merkle trees: one to store all the CSCA certificates supported by Self, one for all the DSCs that were proven to be part of the CSCA PKI, and one for commitments to user passports. Adding a valid intermediate certificate to the DSC certificates Merkle tree is permissionless, i.e., anyone can prove that a given certificate is valid and add it to the tree. The proof ensures that the certificate added to the tree is correctly signed by a CSCA certificate that is already in the CSCA certificates Merkle tree. Subsequent registrations of passports signed with a DSC certificate already in the DSC Merkle tree reuse that commitment, by proving inclusion of the signer’s DSC certificate in the DSC Merkle tree.

On the other hand, a disclosing proof:

- proves that a registered passport belongs to a Merkle tree seen before (the Self smart contract remembers all intermediate Merkle tree roots between passport registrations)

- then opens the commitment found in the Merkle tree, i.e., recomputes it from the passport data and the user’s secret

- then proves some compliance logic (e.g., the passport holder is not on an OFAC sanctions list)

- then discloses some application-related information about the passport data (e.g. the person is over 18)

- then produces a nullifier for the application

The last point, the nullifier, is simply a hash of some opaque application-specific data (e.g., the application name) and the secret used in the registration proof. This nullifier can then be stored by the application smart contract that uses Self, to prevent replay attacks. Note that since a random secret is used, this nullifier, unlike the nullifier of the registration phase, is randomized, and so does not reveal which passport in the set of registered passports was used.

Importantly, to prevent front-running, a public input (unused by the circuit) carries application-related data that is additionally authenticated by the proof. This way, a proof can, for example, include the recipient of an airdrop, so that network observers looking to front-run such transactions cannot extract the proof and change the recipient to themselves.

Overview of Cryptographic Primitives Implementations

Here we give an overview of the cryptographic primitives used in the Self project. The main cryptographic primitives implemented (not including hash functions) are:

- RSA signature verification using PKCS1.5 padding.

- RSA signature verification using PSS padding.

- ECDSA signature verification.

The RSA signature verifications rely on the big integer library from zk-Email, adding padding verification on top of the existing library.

The ECDSA implementation, however, relies on the circom-dl library for both elliptic curve operations and big integer emulation.

RSA signature verification

The Self project supports signature verification performed with RSA using both PKCS1.5 padding and PSS padding with multiple configurations.

This is motivated by the fact that different countries support various standards and key lengths. In the following, we give a high-level overview of how these are implemented in the project. Note that, as with other signature verifications performed in this project, signatures are assumed to be computed over hashes of an original message; therefore, the message inputs are always assumed to be the output of a given hash function.

RSA with PKCS1.5 padding

Two similar files, verifyRsa3Pkcs1v1_5.circom and verifyRsa65537Pkcs1v1_5.circom, implement signature verification with RSA PKCS1.5 padding for signatures generated with public exponent $e = 3$ and $e = 65537$, respectively. They define templates, e.g., template VerifyRsa65537Pkcs1v1_5(CHUNK_SIZE, CHUNK_NUMBER, HASH_SIZE), which can be instantiated with different chunk sizes, numbers of chunks, and hash sizes to accommodate various key sizes and hash algorithms. Concrete values for these parameters are given in a series of test circuits. For instance, test_rsa_sha1_65537_2048.circom is intended to test signatures generated with a 2048-bit key, SHA-1, and public exponent $e = 65537$:

```circom
template VerifyRsaPkcs1v1_5Tester() {
    signal input signature[35];
    signal input modulus[35];
    signal input message[35];

    VerifyRsa65537Pkcs1v1_5(120, 35, 160)(signature, modulus, message);
}

component main = VerifyRsaPkcs1v1_5Tester();
```

Both verification templates call a Pkcs1v1_5Padding template (defined in Pkcs1v1_5Padding.circom) that, given a message and a modulus as input, computes the expected padded message. This is then compared to the result of the operation $s^e \bmod N$ for the given signature $s$, where $N$ is the modulus.
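For reference, this check corresponds to the EMSA-PKCS1-v1_5 verification defined in RFC 8017. The Python sketch below models it outside the circuit. It assumes SHA-256 and the standard DER DigestInfo prefix; whether the Pkcs1v1_5Padding template embeds exactly this prefix is not stated above. It recomputes the expected encoded message and compares it with $s^e \bmod N$.

```python
import hashlib

# DER prefix of the SHA-256 DigestInfo structure (RFC 8017, Section 9.2, Note 1).
# Assumption: the circuit's padding template may encode this differently.
SHA256_DIGEST_INFO = bytes.fromhex("3031300d060960864801650304020105000420")


def pkcs1_v1_5_encode(digest: bytes, k: int) -> bytes:
    """EMSA-PKCS1-v1_5: 0x00 || 0x01 || 0xFF..0xFF || 0x00 || DigestInfo || digest (k bytes)."""
    t = SHA256_DIGEST_INFO + digest
    if k < len(t) + 11:
        raise ValueError("modulus too short for this digest")
    return b"\x00\x01" + b"\xff" * (k - len(t) - 3) + b"\x00" + t


def verify_rsa_pkcs1_v1_5(signature: int, modulus: int, e: int, digest: bytes) -> bool:
    k = (modulus.bit_length() + 7) // 8                 # modulus length in bytes
    if not 0 <= signature < modulus:
        return False
    recovered = pow(signature, e, modulus).to_bytes(k, "big")   # s^e mod N
    return recovered == pkcs1_v1_5_encode(digest, k)            # compare with expected padding
```

The comparison is made on the whole encoded message rather than by parsing it. This avoids the class of bugs in which a verifier accepts malformed padding.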

RSA with PSS padding

For PSS padding, two templates likewise handle the public exponents $e = 3$ and $e = 65537$, respectively, namely rsapss3.circom and rsapss65537.circom. Both rely on the mask generation template mfg1.circom, which implements the MGF1 mask generation function described in the PSS specification. Tests show examples of concrete parameters passed to the templates to handle various key lengths, hash functions, and salt lengths, for instance:

```circom
template VerifyRsaPss3Sig_tester() {
    signal input modulus[35];
    signal input signature[35];
    signal input message[256];

    VerifyRsaPss3Sig(120, 35, 32, 256, 2048)(modulus, signature, message);
}

component main = VerifyRsaPss3Sig_tester();
```

This instantiates the case of a 2048-bit public key, used in combination with SHA-256 and a salt length of 32, for $e = 3$. Note that, unlike the RSA PKCS1.5 templates, the input message here is an array of bits rather than an array of chunks.
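To make the PSS check concrete, here is a Python sketch of RSASSA-PSS verification following EMSA-PSS-VERIFY from RFC 8017. It is an out-of-circuit model, not the circom templates. It assumes SHA-256 for both the message hash and MGF1, and it takes the salt length in bytes as RFC 8017 does. The digest is passed as bytes rather than as a bit array.

```python
import hashlib


def mgf1(seed: bytes, length: int, hash_fn=hashlib.sha256) -> bytes:
    """MGF1 (RFC 8017): concatenate Hash(seed || counter) until `length` bytes are produced."""
    out, counter = b"", 0
    while len(out) < length:
        out += hash_fn(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def verify_rsa_pss(signature: int, modulus: int, e: int, m_hash: bytes, s_len: int,
                   hash_fn=hashlib.sha256) -> bool:
    """RSASSA-PSS verification for an already-hashed message (EMSA-PSS-VERIFY)."""
    h_len = hash_fn().digest_size
    em_bits = modulus.bit_length() - 1
    em_len = (em_bits + 7) // 8
    if not 0 <= signature < modulus:
        return False
    m = pow(signature, e, modulus)                      # s^e mod N
    # Bits above em_bits must be zero, and EM must have room for hash, salt and separators.
    if m.bit_length() > em_bits or em_len < h_len + s_len + 2:
        return False
    em = m.to_bytes(em_len, "big")
    if em[-1] != 0xBC:                                   # trailer byte
        return False
    masked_db, h = em[: em_len - h_len - 1], em[em_len - h_len - 1 : -1]
    db = bytearray(x ^ y for x, y in zip(masked_db, mgf1(h, len(masked_db), hash_fn)))
    db[0] &= 0xFF >> (8 * em_len - em_bits)              # clear bits outside em_bits
    ps_len = em_len - h_len - s_len - 2
    if any(db[:ps_len]) or db[ps_len] != 0x01:           # zero padding, then 0x01 separator
        return False
    salt = bytes(db[len(db) - s_len:])
    return hash_fn(b"\x00" * 8 + m_hash + salt).digest() == h   # H(0^8 || mHash || salt)
```

Unlike PKCS1.5, the expected encoding cannot be recomputed from the digest alone, because it depends on the salt. The verifier therefore has to unmask DB, recover the salt, and recompute the hash. This is why the circuits need an MGF1 template.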

Big integers

Big integers used for ECDSA verification are implemented in the utils/crypto/bigInt directory.

An integer is represented as an array of field elements, called chunks.

This representation is parametrized by two constants:

- CHUNK_SIZE: the bit length of the values in each chunk.

- CHUNK_NUMBER: the number of chunks used to represent the integer.

For example, a 512-bit integer can be represented using 8 chunks of 64 bits each, corresponding to CHUNK_SIZE = 64 and CHUNK_NUMBER = 8.

From now on, we denote CHUNK_NUMBER as $k$ and CHUNK_SIZE as $n$.

Given chunks $a_0, \dots, a_{k-1}$, the underlying integer $a$ is computed as:

$$a = \sum_{i=0}^{k-1} a_i \cdot 2^{n i}$$

An integer is considered overflow-free if every chunk is in the range $[0, 2^n)$. In this case, every $nk$-bit integer has a unique representation.

The library provides an implementation of operations on big integers, enabling arithmetic operations, comparisons, and foreign field emulation.

To optimize arithmetic operations, the library offers several overflow templates (indicated by overflow in their names).

These perform arithmetic operations without normalizing the chunks at the end.

For example:

- BigAddOverflow: computes the element-wise sum of the chunks.

- ScalarMultOverflow: multiplies each chunk by a scalar value, without normalizing the result.
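The following Python sketch models the chunk representation and the two overflow operations outside the circuit. The function names are hypothetical Python stand-ins for the templates, not their circom signatures. The `normalize` helper shows the carry propagation that the overflow templates skip. After an overflow operation, chunks may exceed CHUNK_SIZE bits, but the represented value is unchanged.

```python
def to_chunks(x: int, chunk_size: int, chunk_number: int) -> list:
    """Overflow-free representation: chunk_number chunks of chunk_size bits, least significant first."""
    assert 0 <= x < 1 << (chunk_size * chunk_number)
    mask = (1 << chunk_size) - 1
    return [(x >> (chunk_size * i)) & mask for i in range(chunk_number)]


def from_chunks(chunks: list, chunk_size: int) -> int:
    """Value of a (possibly overflowed) chunk array: sum of chunk_i * 2^(chunk_size * i)."""
    return sum(c << (chunk_size * i) for i, c in enumerate(chunks))


def big_add_overflow(a: list, b: list) -> list:
    """Element-wise sum; chunks may now exceed chunk_size bits."""
    return [x + y for x, y in zip(a, b)]


def scalar_mult_overflow(a: list, scalar: int) -> list:
    """Multiply every chunk by a scalar without normalizing."""
    return [scalar * x for x in a]


def normalize(chunks: list, chunk_size: int) -> list:
    """Propagate carries so each chunk is back in [0, 2^chunk_size); the final carry becomes an extra chunk."""
    mask, out, carry = (1 << chunk_size) - 1, [], 0
    for c in chunks:
        total = c + carry
        out.append(total & mask)
        carry = total >> chunk_size
    return out + [carry]
```

Deferring normalization pays off in-circuit because each carry step needs range checks. Chaining several overflow operations and normalizing once is cheaper, as long as chunk values stay below the field size.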

For overflow multiplication, the library provides two implementations:

- Standard element-wise multiplication using the limbs.

- Karatsuba algorithm, which is more efficient for specific parameter choices.

The decision of which algorithm to use is made at circuit-generation time using is_karatsuba_optimal_dl().
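Both strategies must produce the same overflowed chunk array, namely the coefficients of the product when chunks are treated as polynomial coefficients. This is what allows choosing between them purely on cost. The Python sketch below compares the two. The recursive split and the base-case size are our own choices, not necessarily those behind is_karatsuba_optimal_dl().

```python
from itertools import zip_longest


def mult_overflow_schoolbook(a: list, b: list) -> list:
    """Chunk-wise product without normalization: out[i + j] += a[i] * b[j]."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def mult_overflow_karatsuba(a: list, b: list) -> list:
    """Same overflowed result via Karatsuba: three half-size products instead of four.

    Assumes len(a) == len(b).
    """
    n = len(a)
    if n <= 2:
        return mult_overflow_schoolbook(a, b)
    m = n // 2
    a0, a1, b0, b1 = a[:m], a[m:], b[:m], b[m:]
    z0 = mult_overflow_karatsuba(a0, b0)                      # low * low
    z2 = mult_overflow_karatsuba(a1, b1)                      # high * high
    sa = [x + y for x, y in zip_longest(a0, a1, fillvalue=0)]
    sb = [x + y for x, y in zip_longest(b0, b1, fillvalue=0)]
    z1 = mult_overflow_karatsuba(sa, sb)                      # (low + high) * (low + high)
    out = [0] * (2 * n - 1)
    for i, c in enumerate(z0):                                # z0 + (z1 - z0 - z2) * x^m + z2 * x^(2m)
        out[i] += c
        out[i + m] -= c
    for i, c in enumerate(z2):
        out[i + 2 * m] += c
        out[i + m] -= c
    for i, c in enumerate(z1):
        out[i + m] += c
    return out
```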

To assert that an integer $a$ is zero modulo some number $p$, the prover witnesses the quotient $q$ and the remainder of the division $a / p$. The circuit then asserts that

$$a - q \cdot p$$

is the big integer corresponding to zero, using BigIntIsZero.

Another example is the BigMultModP template, which computes the product of two big integers $a$ and $b$, reduced modulo some integer $p$:

- Compute $c = a \cdot b$ using BigMultOverflow.

- The prover witnesses a quotient $q$ and remainder $r$ for $c / p$.

- The circuit asserts that

$$c - q \cdot p - r$$

is the big integer corresponding to zero, using BigIntIsZero.
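The pattern shared by BigIntIsZeroModP and BigMultModP can be modeled in Python: compute an unconstrained hint, then check a linear relation with a chunk-wise zero test. In this sketch, the parameters (64-bit chunks, 4 chunks) are illustrative. The quotient and remainder come from Python's `divmod`, standing in for the prover's witness generator. `big_int_is_zero` checks that an overflowed chunk array represents zero by propagating carries. The sketch assumes reduced inputs ($a, b < p$) and does not range-check $r$.

```python
CHUNK_SIZE, CHUNK_NUMBER = 64, 4   # illustrative parameters for moduli up to 256 bits


def to_chunks(x: int) -> list:
    assert 0 <= x < 1 << (CHUNK_SIZE * CHUNK_NUMBER)
    return [(x >> (CHUNK_SIZE * i)) & ((1 << CHUNK_SIZE) - 1) for i in range(CHUNK_NUMBER)]


def from_chunks(chunks: list) -> int:
    return sum(c << (CHUNK_SIZE * i) for i, c in enumerate(chunks))


def mult_overflow(a: list, b: list) -> list:
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def big_int_is_zero(chunks: list) -> bool:
    """True iff sum(chunks[i] * 2^(CHUNK_SIZE * i)) == 0; chunks may be negative or overflowed."""
    carry = 0
    for c in chunks:
        total = c + carry
        if total % (1 << CHUNK_SIZE) != 0:   # low CHUNK_SIZE bits must cancel
            return False
        carry = total >> CHUNK_SIZE          # exact, since total is divisible by 2^CHUNK_SIZE
    return carry == 0


def big_mult_mod_p(a: list, b: list, p: int) -> list:
    # Witness generation (unconstrained): quotient and remainder of a * b by p.
    q, r = divmod(from_chunks(a) * from_chunks(b), p)
    q_chunks, r_chunks, p_chunks = to_chunks(q), to_chunks(r), to_chunks(p)
    # Constraint side: a * b - q * p - r must be the big integer zero.
    diff = [x - y for x, y in zip(mult_overflow(a, b), mult_overflow(q_chunks, p_chunks))]
    for i, c in enumerate(r_chunks):
        diff[i] -= c
    assert big_int_is_zero(diff)
    return r_chunks
```

The zero test must account for carries between chunks. Checking each chunk of `diff` for zero in isolation would reject valid witnesses, since intermediate chunks can be nonzero while the total cancels.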

Elliptic curve operations

Elliptic curve operations are implemented in the utils/crypto/ec directory, and are part of the circom-dl library.

They make use of the big integer library for arithmetic operations on the base field of the curve.

Recall that an elliptic curve is defined as the set of points $(x, y)$ that satisfy the curve equation

$$y^2 = x^3 + a x + b$$

over some base field $\mathbb{F}_p$, where $x, y \in \mathbb{F}_p$ and $a$ and $b$ are the curve parameters.

There are three main templates that implement useful assertions over elliptic curve points.

PointOnCurve checks if a point lies on the curve, i.e., it checks that the curve equation holds over the base field of the curve.

It does so by computing the following quantity for the point $(x, y)$

$$y^2 - x^3 - a x - b$$

and then asserting that it is zero over the base field using BigIntIsZeroModP.

PointOnTangent checks whether a point $(x_3, y_3)$ lies on the tangent to the curve at a given point $(x_1, y_1)$.

Since this template is used in point doubling, the y coordinate of the checked point is negated.

First, the slope of the tangent line at $(x_1, y_1)$ is computed as

$$\lambda = \frac{3 x_1^2 + a}{2 y_1}$$

Then, the tangent line is evaluated at $x_3$ to get the corresponding y coordinate $y' = y_1 + \lambda (x_3 - x_1)$.

The check should pass if $y' = -y_3$. Multiplying through by $2 y_1$ to clear the denominator, we need to assert that

$$2 y_1 (y_1 + y_3) + (3 x_1^2 + a)(x_3 - x_1) = 0$$

holds over the base field, which is again implemented using BigIntIsZeroModP.

Lastly, PointOnLine checks whether three points $(x_1, y_1)$, $(x_2, y_2)$, and $(x_3, y_3)$ are collinear.

Since this template is used in point addition, the y coordinate of the third point is negated.

Collinearity is checked by asserting that the relation

$$(y_1 + y_3)(x_2 - x_1) + (y_2 - y_1)(x_3 - x_1) = 0$$

holds over the base field, again using BigIntIsZeroModP.

To compute the sum of two points $P = (x_1, y_1)$ and $Q = (x_2, y_2)$, the following steps are performed:

- the result $R = (x_3, y_3)$ is witnessed

- the circuit asserts that $R$ is a point on the curve using PointOnCurve.

- the circuit asserts collinearity of $P$, $Q$, and $-R = (x_3, -y_3)$ using PointOnLine.

To compute the doubling of a point $P = (x_1, y_1)$, the following steps are performed:

- the result $R = (x_3, y_3)$ is witnessed

- the circuit asserts that $R$ is a point on the curve using PointOnCurve

- the circuit asserts that the point $-R = (x_3, -y_3)$ lies on the tangent line of the curve at $P$ using PointOnTangent.
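The witness-then-check pattern for addition and doubling can be modeled in Python. This is a sketch, not the circom code. It uses the textbook toy curve $y^2 = x^3 + 2x + 3$ over $\mathbb{F}_{97}$ (not a curve Self supports) so results are easy to check by hand. It computes the witness with ordinary affine formulas and then applies the three checks described above as plain modular equations. These equations are our reconstruction of what PointOnCurve, PointOnLine, and PointOnTangent assert.

```python
# Toy curve y^2 = x^3 + 2x + 3 over F_97: illustrative only, not a curve Self uses.
P_MOD, A, B = 97, 2, 3


def point_on_curve(x, y):
    """PointOnCurve: y^2 - x^3 - a*x - b == 0 over the base field."""
    return (y * y - x ** 3 - A * x - B) % P_MOD == 0


def point_on_tangent(x1, y1, x3, y3):
    """PointOnTangent: (x3, -y3) lies on the tangent at (x1, y1), denominator 2*y1 cleared."""
    return (2 * y1 * (y1 + y3) + (3 * x1 * x1 + A) * (x3 - x1)) % P_MOD == 0


def point_on_line(x1, y1, x2, y2, x3, y3):
    """PointOnLine: (x1, y1), (x2, y2) and (x3, -y3) are collinear."""
    return ((y1 + y3) * (x2 - x1) + (y2 - y1) * (x3 - x1)) % P_MOD == 0


def checked_add(p1, p2):
    (x1, y1), (x2, y2) = p1, p2
    # Witness generation (unconstrained); assumes p1 != +-p2.
    lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    r = (x3, (lam * (x1 - x3) - y1) % P_MOD)
    # Constraints: the witness must be on the curve and collinear with p1, p2 (after negation).
    assert point_on_curve(*r) and point_on_line(x1, y1, x2, y2, *r)
    return r


def checked_double(p1):
    x1, y1 = p1
    # Witness generation (unconstrained); assumes y1 != 0.
    lam = (3 * x1 * x1 + A) * pow(2 * y1 % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - 2 * x1) % P_MOD
    r = (x3, (lam * (x1 - x3) - y1) % P_MOD)
    # Constraints: the witness must be on the curve and on the tangent at p1 (after negation).
    assert point_on_curve(*r) and point_on_tangent(x1, y1, *r)
    return r
```

For example, doubling $(3, 6)$ yields $(80, 10)$, and adding $(3, 6)$ to that yields $(80, 87)$. A wrong witness such as $(80, 10)$ for the sum fails the collinearity check.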

Scalar multiplication is performed using an optimized windowed approach, where the scalar is split into chunks of $w$ bits, also known as the Pippenger algorithm.

For scalar multiplication with the base point $G$, a more efficient template is used instead, which relies on precomputed powers (i.e., multiples) of the generator.

The precomputed values are stored in the ec/powers directory, and are given for multiple standard curves.
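The Python sketch below contrasts the two strategies on the same illustrative toy curve ($y^2 = x^3 + 2x + 3$ over $\mathbb{F}_{97}$). The first is a fixed-window method that doubles $w$ times per window and then adds a table entry. The second is a fixed-base variant in which the multiples $d \cdot 2^{wi} \cdot G$ are precomputed, so no doublings are needed at all. This table layout is our assumption about what "precomputed powers" means; it is not a description of the files in ec/powers.

```python
# Illustrative toy curve: y^2 = x^3 + 2x + 3 over F_97.
P_MOD, A = 97, 2


def ec_add(p1, p2):
    """Affine point addition; None represents the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1 % P_MOD, -1, P_MOD) % P_MOD
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    return x3, (lam * (x1 - x3) - y1) % P_MOD


def scalar_mult_windowed(k, point, w=4):
    """Fixed-window method: for each w-bit window, double w times and add table[digit]."""
    table = [None]
    for _ in range((1 << w) - 1):
        table.append(ec_add(table[-1], point))          # table[d] = d * point
    acc = None
    for i in reversed(range(max(1, -(-k.bit_length() // w)))):
        for _ in range(w):
            acc = ec_add(acc, acc)
        acc = ec_add(acc, table[(k >> (i * w)) & ((1 << w) - 1)])
    return acc


def fixed_base_tables(g, w, n_windows):
    """Precompute tables[i][d] = d * 2^(w*i) * g once, for a base point known in advance."""
    tables, base = [], g
    for _ in range(n_windows):
        row = [None]
        for _ in range((1 << w) - 1):
            row.append(ec_add(row[-1], base))
        tables.append(row)
        for _ in range(w):
            base = ec_add(base, base)
    return tables


def scalar_mult_fixed_base(k, tables, w):
    """No doublings: add one precomputed entry per window (k must fit in len(tables) * w bits)."""
    acc = None
    for i, row in enumerate(tables):
        acc = ec_add(acc, row[(k >> (i * w)) & ((1 << w) - 1)])
    return acc
```

The fixed-base variant trades storage for fewer group operations. It only pays off when the point is known ahead of time, as the generator is.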

ECDSA verification

Self supports signature verification performed with ECDSA with multiple configurations of hashes and curves. The following curves are supported:

- brainpoolP224r1

- brainpoolP256r1

- brainpoolP384r1

- brainpoolP512r1

- p256

- p384

- p521

Signature verification assumes that signatures are generated over the hash of the original message, with an additional preprocessing step applied to the hash based on the following conditions:

- Truncation: if the hash length exceeds the scalar field size in bits, the rightmost bits are truncated.

- Padding: if the hash length is shorter than the scalar field size, leading zeroes are added (left-padding) to match the required bit length.
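A small Python sketch of this preprocessing on bit arrays follows. It assumes the bits are ordered most significant first; the ordering used by the circuit is not stated here. `order_bits` stands for the bit length of the curve order. Truncation keeps the leftmost bits. Left-padding leaves the integer value unchanged and only fixes the array length.

```python
def hash_to_scalar_bits(hash_bits, order_bits):
    """Truncate or left-pad a hash (list of 0/1, most significant bit first) to order_bits bits."""
    if len(hash_bits) > order_bits:
        return hash_bits[:order_bits]                       # keep the leftmost bits
    return [0] * (order_bits - len(hash_bits)) + hash_bits  # left-pad with zeros (no-op if equal)


def bits_to_int(bits):
    """Interpret a most-significant-bit-first bit list as an integer."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value
```

For instance, a SHA-512 digest used with a 256-bit curve order becomes the digest shifted right by 256 bits. A SHA-224 digest keeps its value and is only widened to 256 bits.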

The ECDSA signature verification accepts the following inputs:

- signature: the signature components $(r, s)$, each represented as a big integer.

- pubkey: the public key used to verify the signature, consisting of an elliptic curve point represented as big integers.

- hashed: the hash of the message to be verified, represented as an array of bits.

Below is a structured overview of the verification flow:

- The value of hashed is transformed into the big-integer representation by grouping its bits into chunks of CHUNK_SIZE bits, where CHUNK_NUMBER is the number of chunks set in the template parameters, and converting each chunk to numeric form using the Bits2Num template. These chunks are stored in hashedChunked.

- Retrieve the order $n$ of the elliptic curve using the EllipicCurveGetOrder function.

- Compute the modular inverse of $s$ (denoted $s^{-1}$) using the BigModInv template.

- Compute $u_1 = z \cdot s^{-1} \bmod n$ and $u_2 = r \cdot s^{-1} \bmod n$ using the modular multiplication template BigMultModP, where $z$ is the value of hashedChunked.

- Compute $u_1 \cdot G$ using EllipticCurveScalarGeneratorMult, where $G$ is the curve’s base point.

- Compute $u_2 \cdot Q$ using EllipticCurveScalarMult, where $Q$ is the public key.

- The previous two computed points are added together (i.e., $u_1 \cdot G + u_2 \cdot Q$) using EllipticCurveAdd, resulting in $R = (x_R, y_R)$.

- Verify that $x_R = r$ by comparing each chunk for equality.
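Putting the steps together, the following Python sketch models the verification flow for P-256 outside the circuit. It uses plain double-and-add rather than the windowed and precomputed templates, and Python's `pow` in place of BigModInv. It follows textbook ECDSA: it checks that the public key is on the curve and reduces $x_R$ modulo $n$ before comparing. The list above describes a direct chunk-wise comparison of $x_R$ with $r$ and does not mention a public-key check, so the two may differ in edge cases.

```python
# NIST P-256 domain parameters (SEC 2 / FIPS 186-4).
P = 2**256 - 2**224 + 2**192 + 2**96 - 1
A = P - 3
B = 0x5AC635D8AA3A93E7B3EBBD55769886BC651D06B0CC53B0F63BCE3C3E27D2604B
N = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551
G = (0x6B17D1F2E12C4247F8BCE6E563A440F277037D812DEB33A0F4A13945D898C296,
     0x4FE342E2FE1A7F9B8EE7EB4A7C0F9E162BCE33576B315ECECBB6406837BF51F5)


def on_curve(pt) -> bool:
    x, y = pt
    return (y * y - x * x * x - A * x - B) % P == 0


def ec_add(p1, p2):
    """Affine addition with None as the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1 % P, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return x3, (lam * (x1 - x3) - y1) % P


def scalar_mult(k: int, pt):
    """Plain double-and-add; the circuits use windowed or precomputed variants instead."""
    acc = None
    for bit in bin(k)[2:]:
        acc = ec_add(acc, acc)
        if bit == "1":
            acc = ec_add(acc, pt)
    return acc


def ecdsa_verify(r: int, s: int, pubkey, z: int) -> bool:
    """z is the preprocessed (truncated or left-padded) message hash as an integer."""
    if not (1 <= r < N and 1 <= s < N) or not on_curve(pubkey):
        return False
    s_inv = pow(s, -1, N)                    # modular inverse of s (BigModInv)
    u1, u2 = z * s_inv % N, r * s_inv % N    # BigMultModP
    point = ec_add(scalar_mult(u1, G), scalar_mult(u2, pubkey))
    return point is not None and point[0] % N == r
```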

Overview of the Main Protocol Circuits

There are three main circuits that are used in the protocol:

- The DSC circuit verifies the signature of a DSC certificate using a CSCA certificate in the CSCA tree, and additionally generates a leaf to be appended in the DSC tree.

- The registration circuit verifies the signature of a passport using a DSC certificate in the DSC tree, and additionally generates a commitment to be stored on-chain, and a nullifier to prevent double registration of the same passport.

- The disclose circuit allows the user to disclose some selected information about the passport data, and generates a nullifier that can be application-specific.

DSC circuit

The purpose of the DSC circuit is to verify that a DSC certificate is signed by one of the CSCA certificates in the CSCA tree, and to generate a leaf to be appended to the DSC tree. First, it takes as input the raw CSCA bytes and a Merkle proof of their inclusion in the CSCA tree. The bytes are packed and hashed to generate the leaf, and the Merkle root is computed using the Merkle proof. The computed Merkle root is constrained to be equal to the root passed as a public parameter by the verification contract.

Additionally, the public key of the CSCA is passed as input, represented as an array of chunks. This additional input is verified to be consistent with a sequence of bytes in the original CSCA certificate by comparing it with a subarray of the CSCA certificate whose bounds are provided by the prover. The bounds are checked to be consistent with the public key size for the specific algorithm parameters used.

Finally, the hash digest of the raw DSC certificate is computed, and the signature is verified using the public key of the CSCA. A commitment to the DSC certificate is also generated, computed as

dsc_leaf = Poseidon(Poseidon(Poseidon(raw_dsc), raw_dsc_len), csca_tree_leaf)

which is output by the circuit and inserted in the DSC tree.
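The Python sketch below makes the nesting concrete. Poseidon is not in the Python standard library, so a SHA-256-based stand-in `h` is used instead; it is not Poseidon. The byte packing (zero-padding to a fixed maximum length, then 31 bytes per field element) is a hypothetical choice for illustration, not Self's actual packing. Under these assumptions, the sketch shows one reason to bind the length. Two certificates that differ only in trailing zero bytes pack identically, and only `raw_dsc_len` tells them apart.

```python
import hashlib

# BN254 scalar field, the default prime used by circom.
FIELD = 21888242871839275222246405745257275088548364400416034343698204186575808495617


def h(*inputs: int) -> int:
    """Stand-in for Poseidon (NOT Poseidon): SHA-256 over 32-byte encodings, reduced into the field."""
    data = b"".join(x.to_bytes(32, "big") for x in inputs)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % FIELD


def hash_padded_bytes(data: bytes, max_len: int) -> int:
    """Hypothetical packing: zero-pad to max_len, then 31 bytes per field element, then hash."""
    padded = data.ljust(max_len, b"\x00")
    return h(*(int.from_bytes(padded[i:i + 31], "big") for i in range(0, max_len, 31)))


def dsc_leaf(raw_dsc: bytes, max_len: int, csca_tree_leaf: int) -> int:
    # dsc_leaf = Poseidon(Poseidon(Poseidon(raw_dsc), raw_dsc_len), csca_tree_leaf)
    return h(h(hash_padded_bytes(raw_dsc, max_len), len(raw_dsc)), csca_tree_leaf)
```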

Registration circuit

The purpose of the registration circuit is to verify that passport data is correctly signed by a DSC certificate in the DSC tree, and to generate a commitment to the passport and a nullifier to prevent double registration of the same passport. As before, the raw DSC certificate is given as input, and its hash is checked for inclusion in the DSC Merkle tree. The public key of the DSC certificate is also passed as input and is verified to be consistent with the DSC certificate bytes by comparing it with a subarray of the DSC certificate whose bounds are provided by the prover. As for the DSC circuit, the bounds are checked to be consistent with the public key size for the specific algorithm parameters used.

The passport data and the signature are verified using the PassportVerifier template, which hashes the sections of the passport data and verifies the signature to be valid using the public key extracted from the DSC certificate.

Finally, a passport commitment is computed as

passport_leaf = Poseidon(secret, 1, Poseidon(dg1), Poseidon(eContent_hash), dsc_tree_leaf)

Additionally, the nullifier is computed as the Poseidon hash of the SHA hash of the signed attributes. Both the commitment and the nullifier are output by the circuit and stored on-chain.
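The Python sketch below models the two registration outputs. `h` is a SHA-256-based stand-in for Poseidon (not Poseidon itself), the byte packing in `h_bytes` is hypothetical, and SHA-256 is assumed for the hash of the signed attributes. The sketch illustrates the property the design relies on. The commitment depends on the user's secret, while the registration nullifier depends only on the signed passport data. Registering the same passport again with a fresh secret therefore yields the same nullifier.

```python
import hashlib

FIELD = 21888242871839275222246405745257275088548364400416034343698204186575808495617  # BN254


def h(*inputs: int) -> int:
    """Stand-in for Poseidon (NOT Poseidon): SHA-256 over 32-byte encodings, reduced into the field."""
    data = b"".join(x.to_bytes(32, "big") for x in inputs)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % FIELD


def h_bytes(data: bytes) -> int:
    """Hypothetical packing of bytes into field elements (31 bytes each) before hashing."""
    return h(*(int.from_bytes(data[i:i + 31], "big") for i in range(0, len(data), 31)))


def passport_leaf(secret: int, dg1: bytes, econtent_hash: bytes, dsc_tree_leaf: int) -> int:
    # passport_leaf = Poseidon(secret, 1, Poseidon(dg1), Poseidon(eContent_hash), dsc_tree_leaf)
    return h(secret, 1, h_bytes(dg1), h_bytes(econtent_hash), dsc_tree_leaf)


def registration_nullifier(signed_attr: bytes) -> int:
    # Poseidon over the SHA hash of the signed attributes (SHA-256 assumed here).
    return h_bytes(hashlib.sha256(signed_attr).digest())
```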

Disclose circuit

The purpose of the disclose circuit is to verify that some disclosed information about a passport is consistent with the previously verified passport data. To do so, the circuit verifies that the prover knows some data and a secret key such that the computed commitment is in the passport Merkle tree. After verifying the commitment, it checks that the disclosed data is consistent with the passport data. The prover can select which data or property of the passport to disclose using selector inputs. Finally, the circuit generates a nullifier, which is computed together with a “scope”, which could be the name of the app or event that we are disclosing our information to. The nullifier is simply a Poseidon hash of the user’s secret and the scope, and it is used to ensure that the same user can perform only one disclosure for each scope.
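The two core checks of the disclose circuit can be sketched in Python. The first recomputes a Merkle root from a commitment and its authentication path; the second derives the scope-specific nullifier. `h` is a SHA-256-based stand-in for Poseidon, and the tree is modeled as a plain binary Merkle tree. The Lean Incremental Merkle Tree used on-chain is not reproduced here.

```python
import hashlib

FIELD = 21888242871839275222246405745257275088548364400416034343698204186575808495617  # BN254


def h(*inputs: int) -> int:
    """Stand-in for Poseidon (NOT Poseidon): SHA-256 over 32-byte encodings, reduced into the field."""
    data = b"".join(x.to_bytes(32, "big") for x in inputs)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % FIELD


def verify_merkle_path(leaf: int, siblings: list, path_bits: list, root: int) -> bool:
    """Recompute the root from a leaf; path_bits[i] == 1 means the node is the right child at level i."""
    node = leaf
    for sibling, is_right in zip(siblings, path_bits):
        node = h(sibling, node) if is_right else h(node, sibling)
    return node == root


def disclose_nullifier(secret: int, scope: int) -> int:
    # nullifier = Poseidon(secret, scope): stable per (user, scope), unlinkable across scopes
    return h(secret, scope)
```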

Overview of the Self Smart Contracts

The Self contracts consist of three main components that handle different aspects of passport verification and identity management.

The IdentityVerificationHub manages all interactions between users’ zero-knowledge proofs and the on-chain identity registry. It maintains mappings to multiple verifier contracts to handle different types of proof circuits. When users submit proofs, the hub routes them to the appropriate verifier contract and, upon successful verification, triggers the IdentityRegistry to record the user’s commitment.

The IdentityRegistry contract is the on-chain ledger for user identity commitments. It implements a Lean Incremental Merkle Tree to efficiently store and prove the existence of identity commitments. Each commitment in the tree corresponds to a verified passport, and the registry maintains a mapping of nullifiers to prevent double registration of the same passport. The registry also stores and manages two important Merkle roots:

- The OFAC root, to enforce sanctions checks

- The CSCA root, to verify the authenticity of DSC certificates

Every time a commitment is added, removed, or updated, the registry generates and timestamps a new Merkle root to create an auditable history of the system’s state.

The PassportAirdropRoot contract demonstrates how applications can build on top of this infrastructure. It verifies that users possess valid passports through Verify Commitment (VC) and Disclose proofs. These proofs are verified against predetermined parameters such as:

- a specific scope (a unique identifier for the application),

- an attestation identifier (a unique identifier for the type of attestation, e.g., an e-passport attestation),

- and, optionally, a root timestamp from the identity registry.

The PassportAirdropRoot contract implements its own nullifier tracking to prevent users from registering their address for the airdrop twice. Thus, it provides an instructive example of how applications can maintain their own state while leveraging Self’s core verification infrastructure.

To allow for future improvements while maintaining stable contract addresses and state, Self’s smart contracts make use of the Universal Upgradeable Proxy Standard (UUPS). Access between contracts is strictly controlled, with the IdentityRegistry only accepting commitment registrations from the IdentityVerificationHub, and administrative functions being restricted to an authorized contract-owner address.

IdentityVerificationHub

The IdentityVerificationHub contract serves as the central verification point for all zero-knowledge proofs in the system. It manages verifier mappings to handle different types of proofs and coordinates with the IdentityRegistry for commitment storage.

The contract maintains two primary verifier mappings:

- sigTypeToRegisterCircuitVerifiers: Maps signature types to their corresponding register circuit verifiers

- sigTypeToDscCircuitVerifiers: Maps signature types to their corresponding DSC circuit verifiers

These mappings enable the hub to route proofs to the appropriate external verifier contracts based on the signature type being verified. When a user submits a proof, the hub:

1. Identifies the correct verifier contract from its mappings

2. Calls the verifier with the proof and public inputs

3. Upon successful verification, instructs the IdentityRegistry to store the user’s commitment

For VC and Disclose proofs, the hub uses a dedicated vcAndDiscloseCircuitVerifier to verify inclusion proofs. These proofs demonstrate that a previously registered passport commitment exists in the IdentityRegistry’s Merkle tree and satisfies specific predicates.

The hub also provides utility functions to convert the output of vcAndDisclose circuits into a readable format, which allows external contracts to access and verify specific passport attributes.

IdentityRegistry

The IdentityRegistry contract is the on-chain storage layer for identity commitments, and maintains several important data structures:

- An Incremental Merkle Tree of identity commitments

- A mapping of nullifiers to prevent double registration

- A mapping of root timestamps

- External Merkle roots: ofacRoot and cscaRoot

When the IdentityVerificationHub verifies a proof successfully, it calls the registry’s registerCommitment function to store the new commitment in the Merkle tree. Before insertion, the contract checks that the associated nullifier hasn’t been used before, preventing the same passport from being registered multiple times.

Each time the Merkle tree is modified through commitment registration, the contract generates a new root and records its timestamp. This creates a historical record of valid roots, which is necessary for proving inclusion at specific points in time. External contracts can verify these timestamps using the rootTimestamps mapping.
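The registration bookkeeping can be modeled in Python as a hypothetical, off-chain sketch rather than the Solidity contract. The hash is a SHA-256 stand-in, and the timestamp is passed in explicitly where the contract would use the block time. The root computation carries an unpaired node up unhashed, a LeanIMT-style rule that we assume here rather than take from the contract. The sketch rejects reused nullifiers and records every new root with its timestamp.

```python
import hashlib


def h2(left: int, right: int) -> int:
    """Stand-in for the tree's hash function (SHA-256 here, not Poseidon)."""
    data = left.to_bytes(32, "big") + right.to_bytes(32, "big")
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def merkle_root(leaves: list) -> int:
    """Simplified root: an unpaired node is carried up unchanged (LeanIMT-style rule, assumed)."""
    level = list(leaves)
    while len(level) > 1:
        nxt = [h2(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
    return level[0] if level else 0


class RegistrySketch:
    """Hypothetical model of commitment storage, the nullifier set, and the root history."""

    def __init__(self):
        self.commitments, self.nullifiers, self.root_timestamps = [], set(), {}

    def register_commitment(self, nullifier: int, commitment: int, timestamp: int) -> int:
        if nullifier in self.nullifiers:             # same passport cannot register twice
            raise ValueError("nullifier already used")
        self.nullifiers.add(nullifier)
        self.commitments.append(commitment)
        root = merkle_root(self.commitments)
        self.root_timestamps[root] = timestamp       # every new root is timestamped
        return root
```

Keeping every historical root allows a disclosure proof generated against an older root to remain verifiable after new passports are registered.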

The registry also maintains two external Merkle roots that are important for the verification process:

- ofacRoot: Used for sanctions compliance checks

- cscaRoot: Used to verify the authenticity of DSC certificates in passports

In addition, the contract includes several “development functions” (prefixed with dev) to provide the contract owner with certain admin capabilities for testing, maintenance, and emergency interventions:

- devAddIdentityCommitment: forces the addition of an identity commitment without proof verification

- devUpdateCommitment: updates an existing commitment in the Merkle tree

- devRemoveCommitment: removes a commitment from the Merkle tree

- devAddDscKeyCommitment: forces the addition of a DSC key commitment

- devUpdateDscKeyCommitment: updates an existing DSC key commitment

- devRemoveDscKeyCommitment: removes an existing DSC key commitment

- devChangeNullifierState: directly modifies the state of a nullifier

- devChangeDscKeyCommitmentState: directly modifies the registration state of a DSC key commitment

Many of the registry’s functions come with strict access control. Only the IdentityVerificationHub can register new commitments, and only the contract owner can update the OFAC/CSCA roots or call the above-mentioned dev functions.
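The two access-control roles can be sketched as caller checks in Python, playing the part that Solidity modifiers such as onlyOwner would play in the contract. This is a hypothetical model: the class and method names, and representing callers as strings, are illustrative assumptions rather than the registry's actual interface.

```python
class AccessControlledRegistry:
    """Hypothetical model of the registry's caller checks (not the Solidity code)."""

    def __init__(self, owner: str, hub: str) -> None:
        self.owner = owner
        self.hub = hub
        self.commitments: list[bytes] = []
        self.csca_root = bytes(32)
        self.ofac_root = bytes(32)

    def _require(self, caller: str, allowed: str, role: str) -> None:
        # Python analogue of a Solidity modifier such as onlyOwner.
        if caller != allowed:
            raise PermissionError(f"{caller} is not the {role}")

    def register_commitment(self, caller: str, commitment: bytes) -> None:
        # Only the verification hub may register, and only after it checked a proof.
        self._require(caller, self.hub, "hub")
        self.commitments.append(commitment)

    def update_csca_root(self, caller: str, root: bytes) -> None:
        self._require(caller, self.owner, "owner")
        self.csca_root = root

    def update_ofac_root(self, caller: str, root: bytes) -> None:
        self._require(caller, self.owner, "owner")
        self.ofac_root = root

    def dev_add_identity_commitment(self, caller: str, commitment: bytes) -> None:
        # Owner-only escape hatch: no proof is checked on this path.
        self._require(caller, self.owner, "owner")
        self.commitments.append(commitment)
```

The model makes the trust assumption visible: any commitment added through a dev function bypasses proof verification, so the security of these paths rests entirely on the owner key.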

In summary, the registry serves as the system’s storage layer: it focuses exclusively on identity-commitment storage and Merkle root management, and delegates all proof verification logic to the IdentityVerificationHub.

PassportAirdropRoot

The PassportAirdropRoot contract demonstrates a practical implementation of the Self system. More specifically, it provides a reference implementation for token airdrops based on passport verification, and shows how to securely register users for an airdrop while ensuring each passport can only be used once to claim tokens.

For each airdrop claim attempt, the contract performs the following steps:

1. Verifies the VC and Disclose proof using the IdentityVerificationHub

2. Validates that the proof’s scope matches the expected value (e.g., “airdrop-v1”)

3. Confirms the attestation identifier matches the expected value (typically “1” for e-passports)

4. Checks that the nullifier (derived from the passport and airdrop scope) hasn’t been used before

5. If a snapshot timestamp is specified, verifies that the Merkle root used in the proof existed in the IdentityRegistry at that time

6. Records the nullifier to prevent double-claiming of the airdrop with the same passport

7. Emits a UserIdentifierRegistered event containing the user’s identifier for the airdrop distribution
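The seven steps above can be sketched in Python as a single registration function. This is a minimal model, not the PassportAirdropRoot contract. The `DiscloseProof` fields, the `verify_proof` callable standing in for the IdentityVerificationHub, and the `root_timestamps` map standing in for the registry are all hypothetical. The step 5 check assumes that "existed at that time" means the root's recorded timestamp is no later than the snapshot, which is an assumption about the contract's exact semantics.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class DiscloseProof:
    # Hypothetical flattened view of the public outputs relevant to the airdrop.
    scope: str
    attestation_id: int
    nullifier: int
    merkle_root: bytes
    user_identifier: int


@dataclass
class AirdropRegistration:
    scope: str
    attestation_id: int
    verify_proof: Callable[[DiscloseProof], bool]  # stands in for the hub's verification
    root_timestamps: Dict[bytes, int]  # stands in for the registry's root timestamps
    snapshot_timestamp: Optional[int] = None
    used_nullifiers: Set[int] = field(default_factory=set)
    events: List[Tuple[str, int]] = field(default_factory=list)

    def register(self, proof: DiscloseProof) -> None:
        if not self.verify_proof(proof):  # step 1
            raise ValueError("invalid proof")
        if proof.scope != self.scope:  # step 2
            raise ValueError("unexpected scope")
        if proof.attestation_id != self.attestation_id:  # step 3
            raise ValueError("unexpected attestation id")
        if proof.nullifier in self.used_nullifiers:  # step 4
            raise ValueError("nullifier already used")
        if self.snapshot_timestamp is not None:  # step 5 (assumed semantics)
            created_at = self.root_timestamps.get(proof.merkle_root)
            if created_at is None or created_at > self.snapshot_timestamp:
                raise ValueError("root did not exist at the snapshot")
        self.used_nullifiers.add(proof.nullifier)  # step 6
        self.events.append(("UserIdentifierRegistered", proof.user_identifier))  # step 7
```

All checks run before any state changes, so a rejected claim leaves the nullifier set and event log untouched.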

Thus, the contract provides an instructive example of how projects can build on top of the core Self infrastructure while maintaining their own application-specific state and verification requirements.

Proof Delegation Using TEEs

Because its circuits have a high number of constraints, Self has users delegate their zero-knowledge proof generation to Trusted Execution Environments (TEEs). This way, even resource-constrained user devices can make use of the Self service. This section describes what TEEs are and how the proof delegation protocol works.

Trusted Execution With AWS Nitro Enclaves

A Trusted Execution Environment (TEE) is a secure, isolated area of a processor that guarantees integrity for the code it runs and confidentiality for the data it handles. This isolation means even the host operating system cannot see or tamper with the TEE’s internal state.

AWS Nitro Enclaves offer TEEs on Amazon EC2 instances by providing:

- Dedicated vCPUs and Memory: An enclave is allocated specific hardware resources, separated from the host OS.

- Nitro Secure Module (NSM): A hardware component that provides secure randomness, cryptographic operations, and remote attestation capabilities.

- Minimal Attack Surface: Since the only way for the host to communicate with its enclave is via a vsock, potential attack vectors are greatly reduced.

In essence, Nitro Enclaves allow users to offload sensitive tasks, such as key management, zero-knowledge proof generation, and cryptographic attestations, into an isolated environment.

Other applications can trust the Nitro enclave’s output thanks to its attestations. Attestations are cryptographically signed documents, generated in this case by the Nitro Secure Module (NSM), that prove:

- TEE Integrity: The measurement (hash) of the enclave’s file system, including the application code, matches an expected version.

- Session Binding: It embeds contextual data, such as ephemeral public keys, nonces, and user data, to ensure the session is unique and not replayed.

Attestations are signed within AWS’s public-key infrastructure: the Nitro Secure Module’s public key is itself signed by other AWS keys, and the chain eventually ends at one of AWS’s root keys. This sequence of signatures is called a certificate chain.

By verifying an attestation and its certificate chain, a client knows it is communicating with a genuine TEE running the intended code. It can then, for example, encrypt its user data to the enclave without exposing it to anyone else, including the cloud operators.
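Beyond the signature and chain checks, a client still has to compare the attested values with what it expects. The Python sketch below shows only those application-level comparisons. It assumes that the COSE_Sign1 signature and the certificate chain (from the document's certificate through its cabundle to the AWS root) have already been verified by a library. The field names `pcrs`, `user_data`, and `public_key` follow the Nitro attestation document format. Placing the client's public key in `user_data` and the enclave's ephemeral key in `public_key` is an assumption about how Self binds the session.

```python
import hmac
from typing import Any, Mapping


def check_attestation_claims(
    doc: Mapping[str, Any],
    expected_pcrs: Mapping[int, bytes],
    expected_user_data: bytes,
) -> bool:
    """Application-level checks on an already signature-verified attestation document.

    Assumes the COSE_Sign1 signature and the certificate chain
    (certificate -> cabundle -> AWS root) were verified beforehand.
    """
    if not expected_pcrs:
        # Pinning no measurement at all would accept any enclave image.
        return False
    pcrs = doc.get("pcrs") or {}
    for index, expected in expected_pcrs.items():
        measured = pcrs.get(index)
        # TEE integrity: every pinned measurement must match the expected build.
        if measured is None or not hmac.compare_digest(measured, expected):
            return False
    # Session binding (assumption): user_data carries the client's public key.
    user_data = doc.get("user_data")
    if user_data is None or not hmac.compare_digest(user_data, expected_user_data):
        return False
    # The enclave's ephemeral key should be taken from the verified document itself.
    return doc.get("public_key") is not None
```

PCR0 is the measurement of the enclave image file, so pinning it is what ties an attestation to a specific build of the server code; a client that skips these comparisons learns only that it is talking to some Nitro enclave.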

High-Level Proof Delegation Flow

Self’s TEE service produces zero-knowledge proofs on behalf of clients. Clients rely on the enclave to compute proofs while keeping the inputs private. The protocol is implemented in the following high-level steps:

- Both the user and the enclave perform an ephemeral ECDH key exchange to establish an encrypted channel for subsequent requests.

- Proof-generation requests are queued, and clients must check for the result asynchronously.

TEE server initialization. When the TEE server (enclave) starts:

- It initializes the communication channel with the NSM device, giving it access to randomness and attestation services.

- Launches an RPC server to handle incoming requests from the client (proxied through the host).

- Connects to a database to record proof-generation requests and statuses (proxied through the host).

Self runs three Nitro Enclave instances—one per circuit type: register, dsc, and disclose.

Hello endpoint. When the client wants to generate a proof, it requests a new session with the enclave by hitting a “hello” RPC endpoint (run within the enclave). The endpoint performs the following steps:

- parses the client request as (uuid, U), where uuid is a unique request ID and U is the client’s public key

- generates an ephemeral keypair

- produces an attestation over the client’s public key and the server’s ephemeral public key

- performs a key exchange using both keys, derives the shared secret, and stores it in memory along with the uuid

- returns the attestation
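The key-exchange part of the hello endpoint can be sketched in pure Python. The text only says "ECDH", so the choice of X25519 (RFC 7748) is an assumption, as is the plain SHA-256 step used to turn the shared secret into a session key. The `hello` function and the `SESSIONS` map are hypothetical names. This ladder is not constant-time and is meant for illustration only; a real service would use a vetted library.

```python
import hashlib
import os
from typing import Dict, Tuple

P = 2**255 - 19
A24 = 121665
BASE_POINT = (9).to_bytes(32, "little")


def _decode_scalar(k: bytes) -> int:
    # RFC 7748 clamping of a 32-byte X25519 private key.
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")


def x25519(k: bytes, u: bytes) -> bytes:
    """X25519 scalar multiplication (Montgomery ladder from RFC 7748)."""
    scalar = _decode_scalar(k)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        swap ^= bit
        if swap:  # branch on a secret bit: not constant-time, illustration only
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def generate_keypair() -> Tuple[bytes, bytes]:
    private_key = os.urandom(32)
    return private_key, x25519(private_key, BASE_POINT)


def derive_session_key(private_key: bytes, peer_public_key: bytes) -> bytes:
    shared = x25519(private_key, peer_public_key)
    if shared == bytes(32):
        # An all-zero output means the peer sent a low-order point.
        raise ValueError("invalid peer public key")
    # Assumption: a plain SHA-256 KDF; the real service may use HKDF or another KDF.
    return hashlib.sha256(shared).digest()


SESSIONS: Dict[str, bytes] = {}  # uuid -> session key, held only in enclave memory


def hello(request_uuid: str, client_public_key: bytes) -> bytes:
    server_private, server_public = generate_keypair()
    SESSIONS[request_uuid] = derive_session_key(server_private, client_public_key)
    # In the real flow this key is returned inside the attestation document.
    return server_public
```

Once the client has checked the attestation, it runs `derive_session_key(client_private, server_public)` on its side and obtains the same key that the enclave stored under the uuid.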

Request endpoint. Once the session is established, the client can submit a proof-generation request (each request is associated with a unique uuid) by hitting the request endpoint. The endpoint performs the following steps:

- parses the client request as (uuid, C, onchain), where C is the encrypted request body (containing an IV/nonce, a ciphertext, and an authentication tag for AES-GCM), and onchain determines whether the proof is eventually submitted to a Celo smart contract or to a backend SDK

- retrieves the shared secret associated with the uuid and decrypts the ciphertext

- parses the circuit name (i.e., register, dsc, or disclose) from the decrypted request

- inserts a record into the database (tied to the uuid) and triggers the asynchronous proof-generation pipeline

- returns the uuid to the client to indicate that the request was accepted

The client can then use uuid to track the status of the proof generation.
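The request-endpoint steps can be sketched as one Python handler. To keep the sketch self-contained, AES-GCM decryption is passed in as a `decrypt` callable that is expected to verify the authentication tag and raise on failure. The `sessions` map (uuid to session key), the in-memory `db`, the `start_pipeline` hook, and the JSON field name `circuit` are hypothetical stand-ins for the service's real components. Rejecting a reused uuid is an illustrative choice that follows from each request having a unique uuid.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable, Dict

VALID_CIRCUITS = {"register", "dsc", "disclose"}


@dataclass(frozen=True)
class EncryptedBody:
    nonce: bytes  # AES-GCM IV/nonce
    ciphertext: bytes
    auth_tag: bytes


def handle_request(
    request_uuid: str,
    body: EncryptedBody,
    onchain: bool,
    sessions: Dict[str, bytes],
    decrypt: Callable[[bytes, EncryptedBody], bytes],
    db: Dict[str, Dict[str, Any]],
    start_pipeline: Callable[[str, Dict[str, Any]], None],
) -> str:
    key = sessions.get(request_uuid)
    if key is None:
        raise KeyError("no session for this uuid")
    # decrypt() is expected to verify the AES-GCM tag and raise on failure.
    payload = json.loads(decrypt(key, body))
    circuit = payload.get("circuit")  # hypothetical field name
    if circuit not in VALID_CIRCUITS:
        raise ValueError(f"unknown circuit: {circuit!r}")
    if request_uuid in db:
        raise ValueError("uuid already used")
    db[request_uuid] = {"circuit": circuit, "onchain": onchain}
    start_pipeline(request_uuid, payload)
    return request_uuid
```

Validating the circuit name before touching the database keeps malformed requests from leaving half-created records behind.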

Async proof-generation process. Once the TEE accepts a request, which includes the proof type and the inputs needed to generate the proof, it runs a pipeline in the background to produce the requested zero-knowledge proof. This process has three stages:

- Store User Inputs. Saves both private and public inputs to inputs.json in a temporary folder named tmp_uuid, and updates the request status to Pending in the DB.

- Generate Witnesses. Uses the circuit-specific witness generator (e.g., in a folder matching the circuit name) to produce output.wtns, and updates the request status to WitnessesGenerated in the DB.

- Generate Proof. Invokes rapidsnark to generate the final proof (proof.json) from output.wtns, and records the public inputs/outputs in public_inputs.json. Updates the request status to ProofGenerated and saves both the public inputs and the generated proof in the DB.

If any error occurs, the temporary folder is removed. Similarly, after successful proof generation, the tmp_uuid folder is deleted to save disk space. However, there is currently no recovery mechanism if the TEE server restarts mid-pipeline; the request must then be resubmitted under a new uuid.
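The three stages and the cleanup rule can be sketched as a Python function. The witness generator and the rapidsnark invocation are passed in as callables (in practice, they might wrap `subprocess.run`). The callable signatures, the dictionary-based `db`, and the use of `tempfile.mkdtemp` with a `tmp_<uuid>_` prefix instead of a fixed folder name are assumptions made for the sketch.

```python
import json
import shutil
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict


def run_pipeline(
    request_uuid: str,
    inputs: Dict[str, Any],
    db: Dict[str, Dict[str, Any]],
    generate_witness: Callable[[Path, Path], None],
    generate_proof: Callable[[Path, Path, Path], None],
) -> None:
    # mkdtemp avoids name collisions; the described service uses a folder named tmp_<uuid>.
    workdir = Path(tempfile.mkdtemp(prefix=f"tmp_{request_uuid}_"))
    record = db[request_uuid]
    try:
        inputs_path = workdir / "inputs.json"
        inputs_path.write_text(json.dumps(inputs))
        record["status"] = "Pending"

        witness_path = workdir / "output.wtns"
        generate_witness(inputs_path, witness_path)
        record["status"] = "WitnessesGenerated"

        proof_path = workdir / "proof.json"
        public_path = workdir / "public_inputs.json"
        generate_proof(witness_path, proof_path, public_path)
        record["proof"] = json.loads(proof_path.read_text())
        record["public_inputs"] = json.loads(public_path.read_text())
        record["status"] = "ProofGenerated"
    finally:
        # The working folder is removed on both success and failure.
        shutil.rmtree(workdir, ignore_errors=True)
```

Because the status lives only in the database and the inputs live only in the deleted folder, a restart mid-pipeline leaves a record stuck at its last status, which matches the lack of recovery noted above.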

Proof relayer. A separate service, called a Proof Relayer, monitors the database for newly generated proofs. It then submits these proofs to the designated endpoint: either the Celo Network (on-chain transaction) or a Backend SDK (an off-chain service or API).

By offloading submission to a relayer, the client does not need to remain connected or to retrieve and submit the proof manually.
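A polling relayer can be sketched in a few lines of Python. This is an illustration, not the Proof Relayer's code. The `submit_onchain` and `submit_backend` callables stand in for the Celo transaction and the backend SDK call, and the `relayed` flag is a hypothetical bookkeeping field, since the text does not describe how the relayer marks proofs it has already forwarded.

```python
import time
from typing import Any, Callable, Dict, List

Submit = Callable[[Any, Any], None]


def relay_once(db: Dict[str, Dict[str, Any]], submit_onchain: Submit, submit_backend: Submit) -> List[str]:
    """One polling pass: forward every finished, not-yet-relayed proof."""
    relayed = []
    for request_uuid, record in db.items():
        if record.get("status") != "ProofGenerated" or record.get("relayed"):
            continue
        # The onchain flag chosen at request time selects the destination.
        submit = submit_onchain if record["onchain"] else submit_backend
        submit(record["proof"], record["public_inputs"])
        record["relayed"] = True  # hypothetical bookkeeping field
        relayed.append(request_uuid)
    return relayed


def relay_forever(
    db: Dict[str, Dict[str, Any]], submit_onchain: Submit, submit_backend: Submit, interval_s: float = 5.0
) -> None:
    while True:
        relay_once(db, submit_onchain, submit_backend)
        time.sleep(interval_s)
```

If a submission raises, the record is not marked as relayed, so the next polling pass retries it.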